EDITOR’S NOTE: We welcome this community contribution by Bart Versluijs, also known as bversluijs on the balenaForums. As a constant contributor and prototyper using openbalena, we couldn’t help but have him share some build tips and tricks.

In this post, we’ll be connecting an external AWS S3 provider to openBalena, instead of using the integrated Minio S3 container.

Using openBalena in a production environment comes with some challenges, like scaling and robustness, but also distributing the data in a safe, cost-effective way. This is where using an external AWS S3 provider comes into play.

We, at VeDicium, have been using openbalena for some years now and are actively using it on a daily basis in a production environment. We also enjoy giving back to the community by being active on the balena Forums, including adding support for running openbalena on Kubernetes.

NOTE: You can also use this solution with your fleets on balenaCloud. However, this post will focus on setting up AWS S3 instead of running the Minio container. Maybe in the future, I can demonstrate this method for a balenaCloud fleet.

Before we dive in, let’s cover some basic information.

What is openbalena?

openbalena is a platform, created by balena, to deploy and manage connected devices running balenaOS. It is a package of battle-tested components that the balena team has used in production on balenaCloud for years, serving many large enterprise fleets. It stores device information securely and reliably, allows remote management via a built-in VPN service, and efficiently distributes container images to connected devices. It’s built in the open, inviting any and all open source contributors to test things out and make improvements.

We, and many other community members, use openbalena to manage fleets and to prototype ideas knowing that we have to support or bake in many production-like features. We encourage users who plan on managing devices in a production environment to consider balenaCloud. For example, incredibly useful built-in features, like remote HostOS updates and team organization/role-based access are only available on balenaCloud.

Why use an external AWS S3 provider?

Before we get started, I want to explain why using an external AWS S3 provider is considered best practice for openbalena in a production environment and why we, at VeDicium, are using it. Although Amazon created S3 object storage, other providers offer S3-compatible storage as well, like DigitalOcean Spaces.

The benefits of using an external AWS S3 provider are as follows:

* Cost: This is one of the most important reasons for many businesses. In most cases, using an S3 provider is cheaper than running and maintaining an S3 storage yourself. You only pay for what you use and don’t have to maintain the infrastructure.

* Scalability: It’s much easier to scale than running your own S3 storage. An external S3 provider will store your data and you don’t have to worry about disk space. In addition, your external S3 provider may offer the option to distribute the data to more geographical locations for better network performance, as your devices can be all around the world.

* Reliability: Using an external S3 provider is much more reliable than running your own S3 storage. Most external S3 providers will save your data in more than one place and have backup services.

Getting started

In this post, we’re assuming you already have an openbalena instance running and you’re somewhat familiar with AWS S3 storage. If you don’t have an openbalena instance running yet, you can learn more about that by reading the openbalena quickstart guide.

Creating an S3 bucket

First, choose an external S3 provider. We’ve chosen DigitalOcean Spaces, but here’s a small list of external S3 providers:

* Amazon S3

* DigitalOcean Spaces

* Linode Object Storage

* Vultr Object Storage

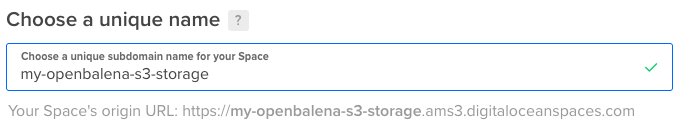

Next, create a bucket, which is like a “separate disk”, where you’d like to store your information. You have to select your region and give the bucket a name. We’re using my-openbalena-s3-storage in the AMS3 datacenter.

Now that we’ve created our S3 bucket, we’ll need to create credentials for it: a key and a secret. How to obtain these differs per S3 provider. When using DigitalOcean Spaces, go to “API”, scroll down to “Spaces access keys” and generate a new key by using the button on the right.

When generating a new access key, you can provide a name. This is just for your own information and will not be needed when connecting the openBalena instance to the S3 bucket. So give it a name and you’ll get a key and a secret.

Modifying openbalena

Now that we’ve set up an S3 bucket on an external S3 provider, we have to change our openBalena configuration. We’ll use the appropriate files for this, so that the changes are most likely to survive an upgrade.

Head to the file config/docker-compose.yaml in your openBalena directory, which will show the following:

```yaml
# Project-specific config.
#
# All paths must be defined relative to compose/services.yml regardless of
# the location of this file, i.e. refer to my-open-balena-checkout/somedir
# as ../somedir. This is because of the way docker-compose handles paths
# when specifying multiple configs and open-balena always specifying
# compose/services.yml as the "base" config.
#
# You may view the effective config with scripts/compose config.

version: "2.0"
```

Now, add the following to the bottom of the file, replacing the “<<>>” variables with your S3 bucket details:

```yaml
services:
  # Overwriting the environment variables to use the external S3 bucket
  registry:
    environment:
      REGISTRY2_S3_BUCKET: <
      REGISTRY2_S3_KEY: <
      REGISTRY2_S3_REGION_ENDPOINT: <
      REGISTRY2_S3_SECRET: <

  # Disable the S3 Minio container, because we won't need it anymore
  s3:
    entrypoint: ["echo", "External S3 used"]
    restart: "no"
```
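
Before restarting the services, it can help to confirm that none of the four values was left empty or as an unfilled placeholder. The following is a minimal sketch, assuming each variable sits on its own line as in the snippet above. `check_s3_override` is a hypothetical helper written for this post, not something shipped with openBalena:

```shell
#!/bin/sh
# Hypothetical helper (not part of openBalena): report every REGISTRY2_S3_*
# variable that is missing, empty, or still starts with a "<" placeholder.
check_s3_override() {
  file="$1"
  status=0
  for var in REGISTRY2_S3_BUCKET REGISTRY2_S3_KEY \
             REGISTRY2_S3_REGION_ENDPOINT REGISTRY2_S3_SECRET; do
    # Take the text after "VAR:" on the first matching line.
    value=$(sed -n "s/^[[:space:]]*${var}:[[:space:]]*//p" "$file" | head -n 1)
    case "$value" in
      ''|'<'*) echo "unset: $var"; status=1 ;;
    esac
  done
  return "$status"
}
```

You could then run `check_s3_override config/docker-compose.yaml` from your openBalena directory and only continue when it prints nothing and exits successfully.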

After that, run the following command:

$ ./scripts/compose up -d

And if everything is set up well, the registry will use the external S3 bucket from now on.
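
One way to double-check the result is to look at the effective configuration, which the comment in config/docker-compose.yaml says you can view with scripts/compose config. The sketch below filters that output down to the non-secret S3 settings. `show_registry_s3` is a hypothetical helper name, and the filter assumes the settings appear as `KEY: value` lines:

```shell
#!/bin/sh
# Hypothetical helper: keep only the non-secret registry S3 settings from
# whatever compose configuration is piped in on stdin.
show_registry_s3() {
  grep -E 'REGISTRY2_S3_(BUCKET|REGION_ENDPOINT)' | sed 's/^[[:space:]]*//'
}

# Intended use, from the openBalena directory:
#   ./scripts/compose config | show_registry_s3
```

If the bucket name and endpoint shown match your external provider, the override has been picked up.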

Congratulations!

Bonus: Changing the storage path

By default, the registry will save everything in the /data folder in your S3 bucket. But maybe you’re using an existing bucket or want to use the bucket for more openBalena-related data, like storing balenaOS images. Then it might be a good idea to change the path of the registry data.

We’re going to change the registry data path to /balena/registry by adding the following to the config/docker-compose.yaml file:

```yaml
services:
  registry:
    environment:
      # Storage path change
      REGISTRY2_STORAGEPATH: /balena/registry
```
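
To get a feeling for where objects end up after this change, here is a rough sketch that builds the location of a single image layer. It assumes the storage path is used as the root directory of the upstream Docker Registry storage layout, which this post does not confirm. `blob_key` is a hypothetical helper and the digest is shortened for readability:

```shell
#!/bin/sh
# Hypothetical helper: build the location under which a registry blob
# would be stored, given the storage path and a layer digest.
# Assumed layout (upstream Docker Registry):
#   <storage path>/docker/registry/v2/blobs/<algo>/<first 2 hex chars>/<hex>/data
blob_key() {
  root="${1%/}"      # drop a trailing slash, if any
  algo="${2%%:*}"    # e.g. "sha256"
  hex="${2#*:}"      # digest without the "sha256:" prefix
  prefix=$(printf '%s' "$hex" | cut -c1-2)
  printf '%s/docker/registry/v2/blobs/%s/%s/%s/data\n' \
    "$root" "$algo" "$prefix" "$hex"
}

blob_key /balena/registry sha256:0123abcd
```

The path is shown with the leading slash as written in the configuration. The object key you see in your bucket browser may omit it.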

Bonus: Proxying all traffic through the registry

By default, the registry won’t handle your requests directly but will redirect them to the S3 bucket which stores the data. For instance, when a connected device asks for a Docker image, the registry will redirect it to the S3 bucket to handle the download. But sometimes you have to deal with hardened firewalls or you’d like to handle all traffic via your own openBalena domain.

The registry has a feature for this which will function as a proxy. Instead of redirecting the device to download the image directly from the S3 bucket, it’ll request the image itself and stream the result to the device. This will prevent the device from ever connecting to the external S3 bucket directly.

To do this, we have to enable this feature by adding the following to the config/docker-compose.yaml file:

```yaml
services:
  registry:
    environment:
      # Disable redirecting to the bucket
      REGISTRY2_DISABLE_REDIRECT: "true"
```
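
If you want to see which of the two behaviours your instance shows, you can request a blob without following redirects and look at the HTTP status code. The sketch below only interprets that status code. `describe_blob_status` is a hypothetical helper, and the host, image, digest and token in the usage comment are placeholders you’d have to fill in yourself:

```shell
#!/bin/sh
# Hypothetical helper: interpret the HTTP status of a blob request that
# was made without following redirects.
describe_blob_status() {
  case "$1" in
    200)                 echo "served by the registry (proxied)" ;;
    301|302|303|307|308) echo "redirected to the storage backend" ;;
    401)                 echo "authentication required" ;;
    *)                   echo "unexpected status: $1" ;;
  esac
}

# Intended use (names in capitals are assumptions; add whatever
# authentication your instance requires):
#   code=$(curl -s -o /dev/null -w '%{http_code}' \
#     -H "Authorization: Bearer $TOKEN" \
#     "https://$REGISTRY_HOST/v2/$IMAGE/blobs/$DIGEST")
#   describe_blob_status "$code"
```

With redirects disabled you’d expect the first outcome. With the default settings you’d expect the second, since curl does not follow redirects unless asked to.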

What’s next?

This is a first step in making your openbalena instance more robust and, probably, lowering the costs of running it. But there are still improvements to be made to make openbalena a more suitable solution for your business, like understanding all services, scaling, and the purpose of all certificates and how to manage them.

As I mentioned above, one way to get premium production features is to create an official balenaCloud account, which you can test with ten free, fully-featured devices. You should be able to use the steps above to connect your balenaCloud device to AWS S3 as well.

Onward, fellow openbalena users!

Let us know how you’re using openbalena, why you’re using it, and give us some feedback on this use case. We’re very curious about what hardware you’re running your instance on, how many devices you’re managing and in what environment.

For more questions, please post them on the openbalena section of the balenaForums.

Found bugs? Report them in the openbalena repo or fix them yourself. Everything is open source!

Keep an eye on the blog for more openbalena goodness.