As the name implies, textbook solutions are great solvers of textbook problems. Yet, production cases often introduce an unusual twist, setting you up against something unique. The existing “one size fits all” solutions do work, but most of the time at the price of a bitter aftertaste: you pay for underutilized functionality while one or two truly desired features are still lacking.

That unfortunate impression turns around 180 degrees once you introduce yourself to the open-source world. It is a somewhat different reality with its own rules. Once you learn how to catch a wave, you are destined for slim solutions that address every item on your requirements list.

In addition, as a sweet bonus, inspiration for something outstanding often hides around the corner while you surf the open-source realm.

Theia Scientific

Theia Scientific applies Internet of Things (IoT)-related technologies and solutions to problematic scientific image processing and analysis workflows in the energy, materials, and life sciences fields of Research and Development (R&D). Part of Theia Scientific’s mission is to bring the IoT to laboratories for automated data analysis, management, and visualization.

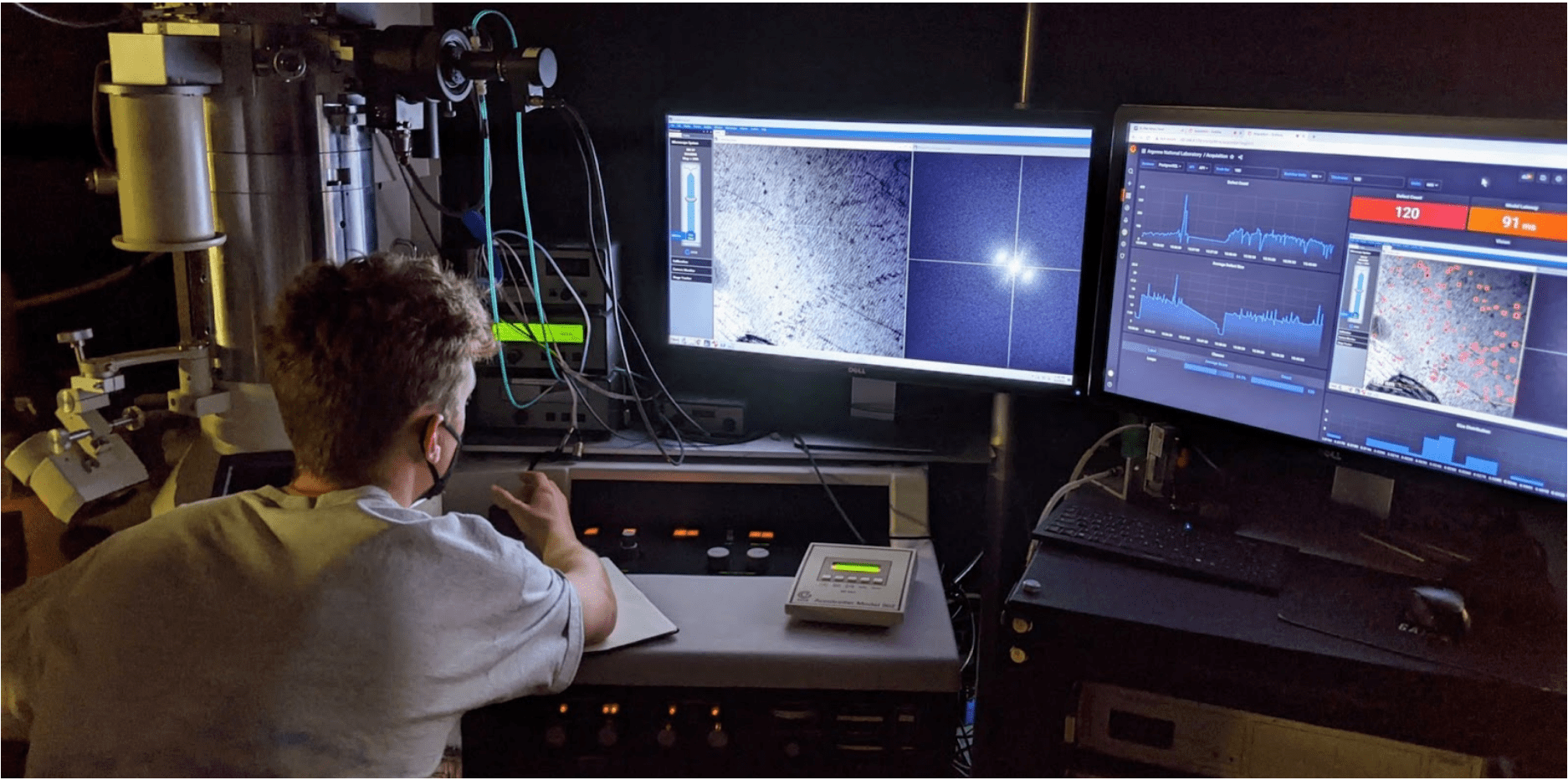

Microscopes, and the processing of acquired images to make scientific discoveries and engineering decisions, are an integral part of nearly all areas of R&D. However, microscopy images are manually analyzed and quantitated by scientists and engineers. This necessary but tedious task is a major time sink and introduces human bias into results.

Recent advances in Artificial Intelligence and Machine Learning (AI/ML) have reached human performance in accuracy in analyzing and quantifying images with reduced bias and at per-image speeds greater than human ability. Thus, it is possible to automate the tedious, bias-inducing microscopy image analysis workflow of scientists and engineers using AI/ML technologies.

However, AI/ML technologies typically require Cloud resources or large High-Performance Computing (HPC)-grade equipment. Yet microscopes and laboratories generally do not have access to the Internet because of logistics, security, or IT policies, nor do they have the space for a large, multi-node cluster of HPC-grade equipment.

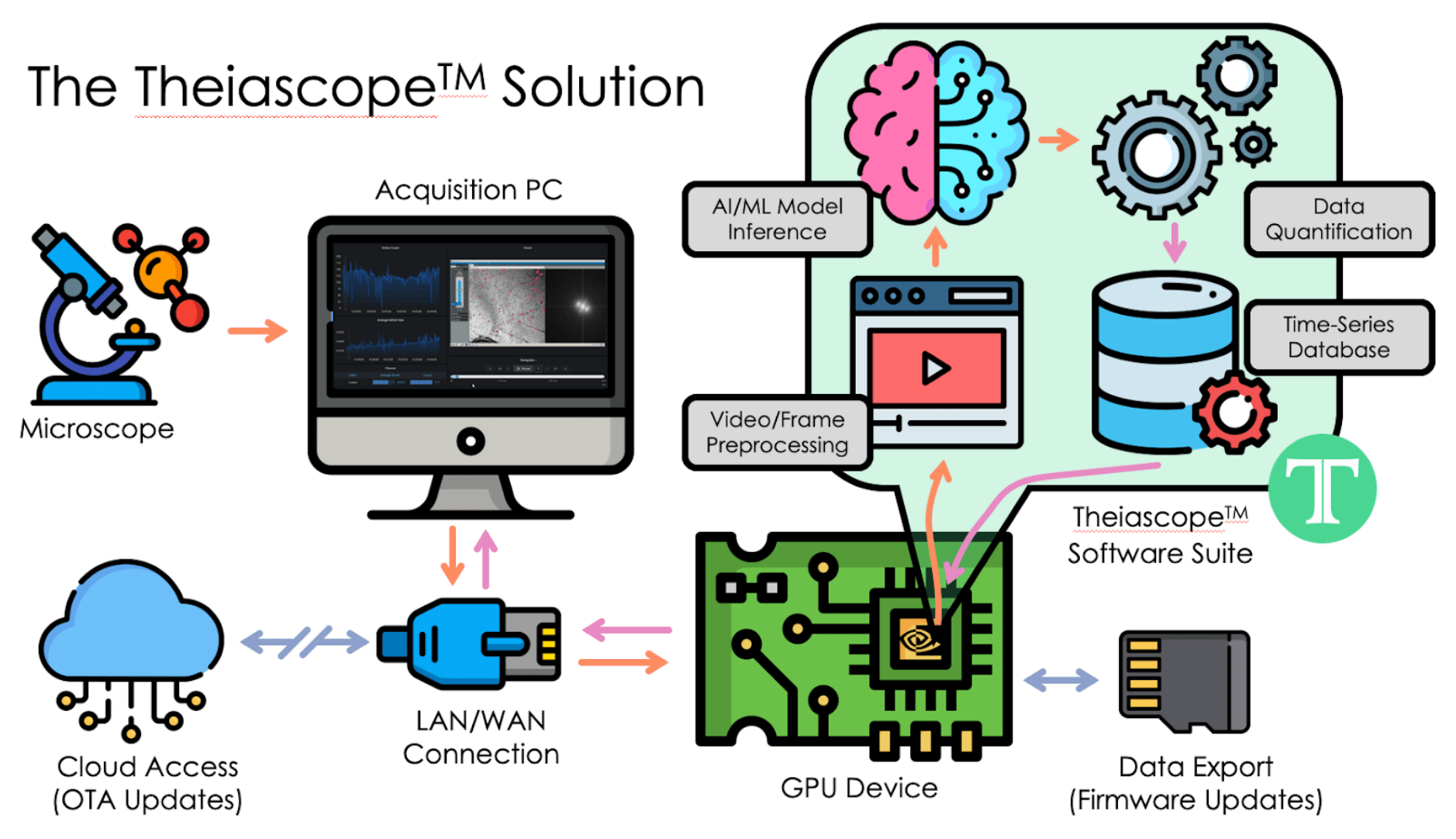

This situation of microscopes being unable to access AI/ML-powered image analysis technologies led to the development of the Theiascope™ platform, which combines IoT devices and balena fleet management with Grafana for scientific experiment observability at a microscope. The novel combination and usage of open-source software transforms the microscopy image analysis workflow for scientists and engineers and brings IoT into the laboratory.

If IoT devices can be used to automate factories, greenhouses, power plants, and homes, then it should be possible to use IoT devices and technologies to automate laboratories. This is how Theia Scientific found balena to address the need to manage the network of state-of-the-art complex laboratory devices, such as electron microscopes.

A tedious part of a microscopist’s work is visually assessing the elements, a.k.a. features, in the field-of-view of a microscope. Meanwhile, advances in AI/ML for object detection from cameras and mobile phones were hollering loudly, offering to improve the quality of quantitation and performance (speed of the image processing).

Theia Scientific heard the AI/ML call and found a way to combine a scientific microscope with AI/ML algorithms to tackle scientific microscope image analysis in real time. This novel solution became known as the Theiascope™ and is a clever combination of open-source software that addresses a long-standing problem for scientists and engineers, such that, once it is deployed, it is hard to picture using a microscope without one.

balenaCloud

Many laboratories have more than one microscope and more than one type of microscope. This quantity and variety are useful for conducting a broad spectrum of experiments and capturing a wide range of images that need to be analyzed. Institutions and facilities with multiple microscopes often refer to them as “fleets”. Similarly, a collection of IoT devices is called a “fleet”. Hence, balenaCloud is one element used with the Theiascope™ platform for fleet management.

balenaCloud allows remote updates, control, and support of Theiascopes™ deployed in any laboratory worldwide.

Grafana

Grafana is another element. Initially, it was designed as a web application to provide interactive system observability. However, thanks to its brilliant architecture, it could easily be viewed from a different perspective. From an unorthodox point of view, it is a platform where one can stack plugins, i.e., virtually any imaginable feature, to meet the requirements of one’s use case.

Because Grafana is such a multi-faceted platform, allowing almost any “crazy” requirement to be met, it was possible to incorporate a balena application plugin and an environment data source. The environment data source reads the environment variables of any host where Grafana is installed and exposes them in a Grafana dashboard. This approach simplifies the workflow since it removes the need to deep dive into the system configuration files of every device in the network.
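To make the idea of an environment data source more concrete, here is a minimal Python sketch of the underlying operation: reading the host's environment variables and shaping them as table rows that a dashboard panel could display. This is not the plugin's actual code; the function name `env_as_rows`, the row layout, and the `BALENA_` prefix filter are assumptions chosen for illustration.

```python
import os
from typing import Mapping, Optional


def env_as_rows(
    env: Optional[Mapping[str, str]] = None, prefix: str = ""
) -> list[dict[str, str]]:
    """Return environment variables as sorted name/value rows.

    `env` defaults to the process environment; `prefix` (hypothetical
    filter) keeps only variables whose names start with it.
    """
    source = os.environ if env is None else env
    return [
        {"name": name, "value": source[name]}
        for name in sorted(source)
        if name.startswith(prefix)
    ]


if __name__ == "__main__":
    # Print only variables that look balena-related (assumed prefix).
    for row in env_as_rows(prefix="BALENA_"):
        print(f"{row['name']}={row['value']}")
```

Passing an explicit mapping instead of relying on `os.environ` keeps the function easy to test on a machine that is not a balena device.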

The balena application plugin is an add-on functionality for Grafana that allows maintaining an IoT device directly. That is particularly valuable when your fleet consists of heterogeneous devices, meaning devices with different specifications, configurations, and possibly a designated admin or operator.

The plugin provides a view into device logs and update progress, and even allows a remote device reboot. As can be seen, the balena application plugin illuminates the balena platform with offbeat colors.

balena, Grafana, and the balena application plugin simplify managing a fleet of heterogeneous devices. If needed, a device could be made accessible directly, which means Internet access is not required.

NVIDIA Jetson AGX Orin Devkit

NVIDIA Jetson AGX Orin modules deliver up to 275 TOPS of AI performance. This gives up to 8X performance over the Jetson AGX Xavier in the same compact form factor for robotics and other autonomous machine use cases.

While waiting for balenaOS support for the Jetson AGX Orin Devkit, the team at Theia Scientific built and tested custom application containers using the preinstalled Ubuntu Linux OS.

Experience running balenaOS on the Jetson AGX Orin

As soon as the balenaOS image became available in the balena Staging environment, the team downloaded and flashed images to their test devices. The Jetson AGX Orin Devkit with the balenaOS installed booted up and started bringing up containers, but the application failed to start.

The first balenaOS development images were missing NFS version 4 client modules, which the Theiascope™ platform heavily utilizes to share files between containers. The next day, the balena team added NFSv4 support, and the team at Theia Scientific was able to build their own balenaOS image following the custom build instructions.
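A quick way to spot this kind of gap on a Linux host is to look at `/proc/filesystems`, which lists the filesystem types the running kernel currently has registered. The sketch below is only a diagnostic idea, not something the teams describe using; the helper names `kernel_supports_fs` and `nfs4_available` are hypothetical. Note the limitation: a module that is installed but not yet loaded does not appear in that file, so a negative result is a hint rather than proof.

```python
from pathlib import Path


def kernel_supports_fs(fs_name: str, proc_filesystems: str) -> bool:
    """Check whether `fs_name` appears in /proc/filesystems-style text.

    Each line holds an optional "nodev" flag followed by the
    filesystem name, so the name is always the last field.
    """
    for line in proc_filesystems.splitlines():
        fields = line.split()
        if fields and fields[-1] == fs_name:
            return True
    return False


def nfs4_available(path: str = "/proc/filesystems") -> bool:
    """Return True if the running kernel has nfs4 registered."""
    try:
        return kernel_supports_fs("nfs4", Path(path).read_text())
    except OSError:
        # File missing or unreadable (for example, on a non-Linux host).
        return False


if __name__ == "__main__":
    print("nfs4 registered:", nfs4_available())
```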

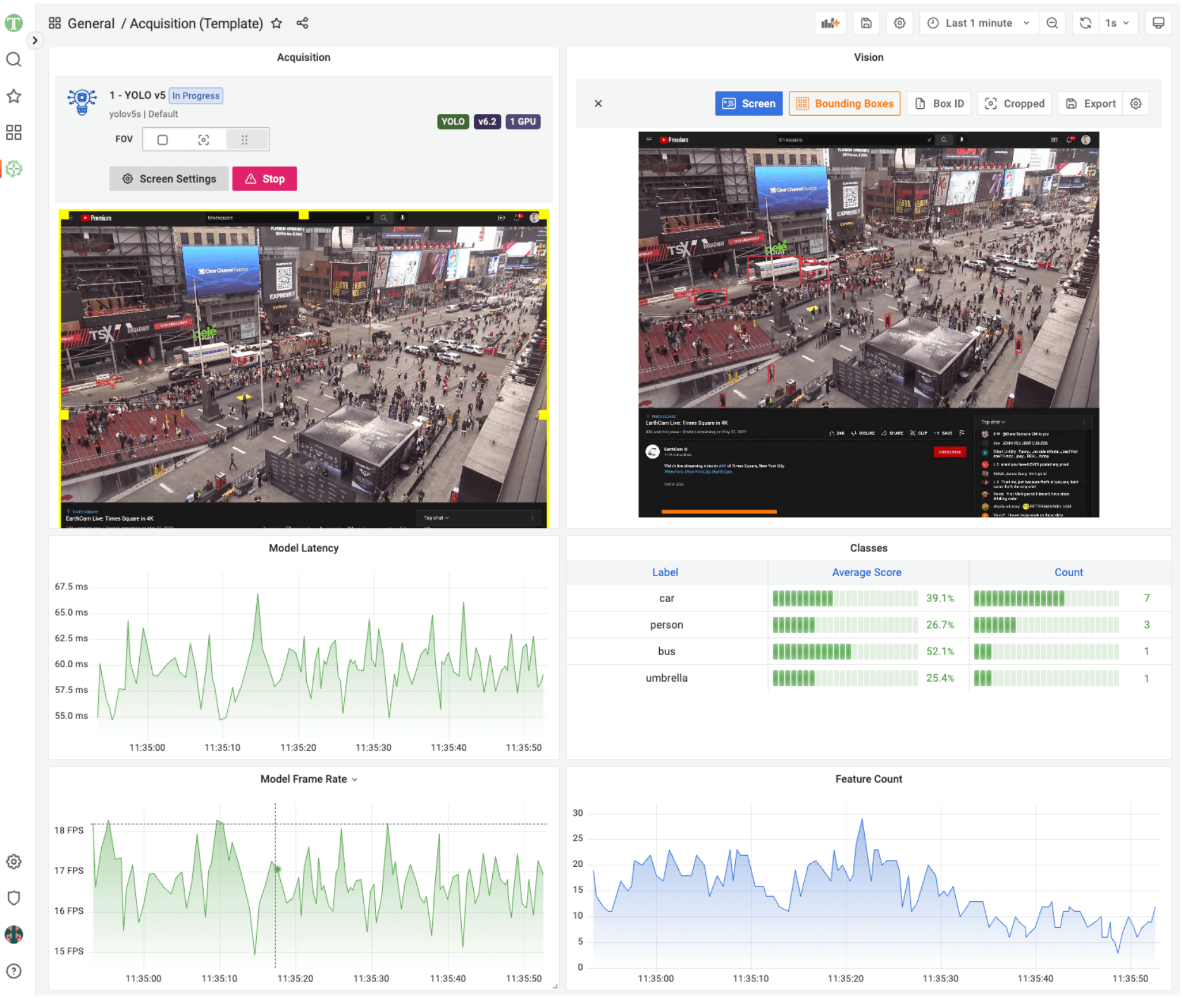

This time all containers started up and were ready to go. For the tests, YOLOv5, the world’s most loved vision AI object detection model, was used, and a webcam overlooking Times Square in New York City served as the reference image feed. The Model Frame Rate panel displays the inference rate in Frames-per-Second (FPS) to indicate the model performance.

Amazingly, a 2x improvement in inference performance over the Jetson AGX Xavier devkit was observed without any optimizations to the model. The Jetson AGX Orin ran the YOLOv5 inference model at 18 FPS while the same model ran on the Jetson AGX Xavier at 9 FPS. Further refinement of the inference pipeline and some initial application optimizations have continued to increase inference speeds, with up to 30 FPS now readily achievable on the NVIDIA Jetson AGX Orin.
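The arithmetic behind these figures is simple enough to sketch. The Python snippet below shows one way an FPS value could be derived from frame completion timestamps, and how the reported 18 FPS and 9 FPS translate into a speedup and a per-frame time budget. The function names are hypothetical, and how the Model Frame Rate panel actually computes its value is not stated in this post.

```python
from typing import Sequence


def frames_per_second(timestamps: Sequence[float]) -> float:
    """Average FPS from frame completion times given in seconds."""
    if len(timestamps) < 2:
        return 0.0
    elapsed = timestamps[-1] - timestamps[0]
    # N timestamps span N - 1 frame intervals.
    return (len(timestamps) - 1) / elapsed if elapsed > 0 else 0.0


def speedup(new_fps: float, old_fps: float) -> float:
    """Ratio of the new frame rate to the old one."""
    return new_fps / old_fps


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given FPS."""
    return 1000.0 / fps


if __name__ == "__main__":
    # Figures reported above: Orin at 18 FPS, Xavier at 9 FPS.
    print(f"speedup: {speedup(18, 9):.1f}x")
    print(f"per-frame budget at 18 FPS: {frame_budget_ms(18):.1f} ms")
    print(f"per-frame budget at 30 FPS: {frame_budget_ms(30):.1f} ms")
```

Seen this way, going from 18 FPS to 30 FPS means the whole pipeline has to finish each frame in roughly 33 ms instead of roughly 56 ms.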

Ad-Hoc Heterogeneous Cluster Deployment

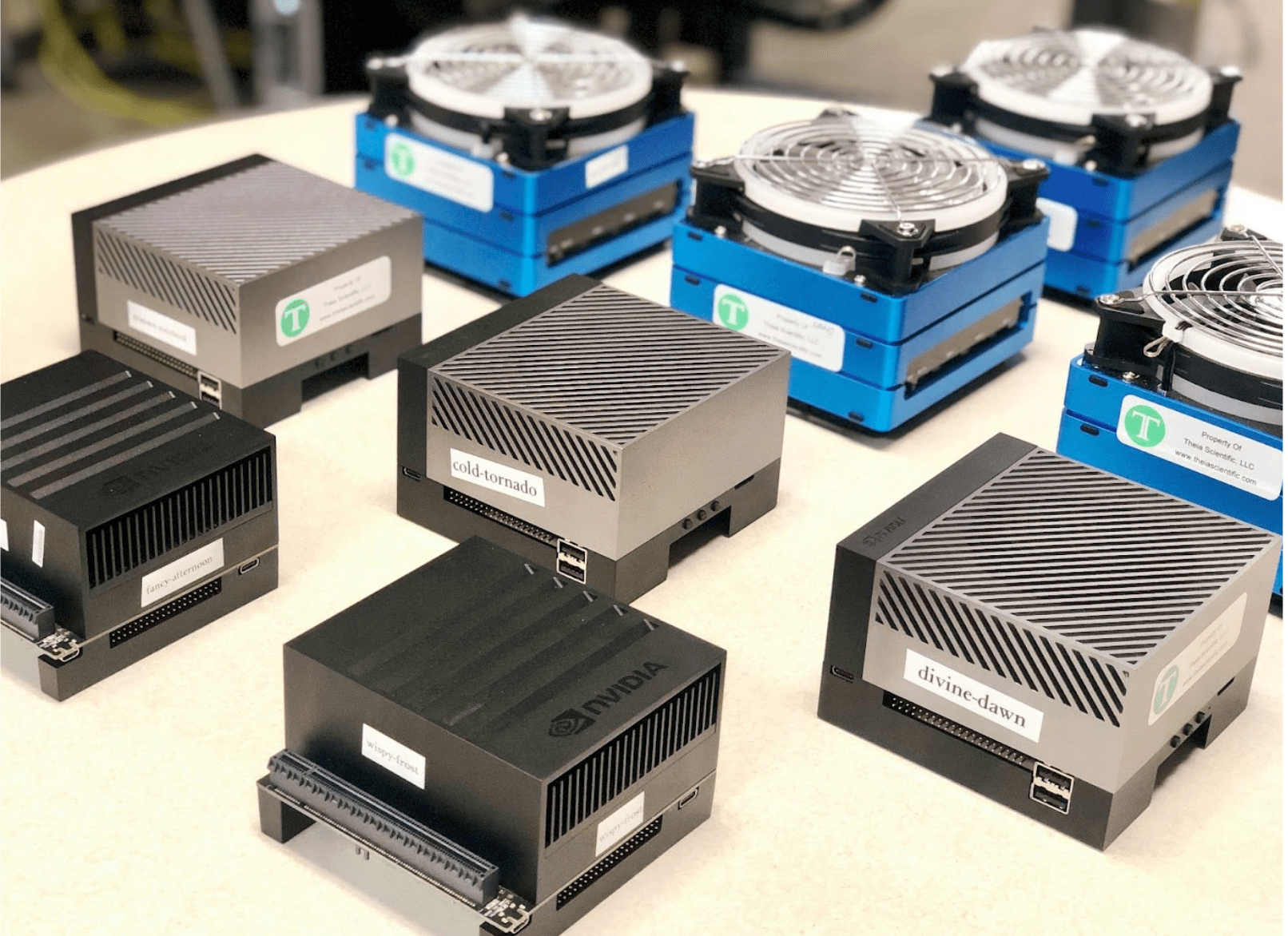

With the resulting success of adding support for the NVIDIA Jetson AGX Orin Devkit and the improvement in inference performance, Theia Scientific and Volkov Labs visited the University of Michigan – Ann Arbor to deploy more than 20 devices consisting of Jetson AGX Orins, Jetson AGX Xaviers, and Jetson Xavier NXs in the Seeed Studio Jetson Mate Advanced form factor across three sites on the engineering campus.

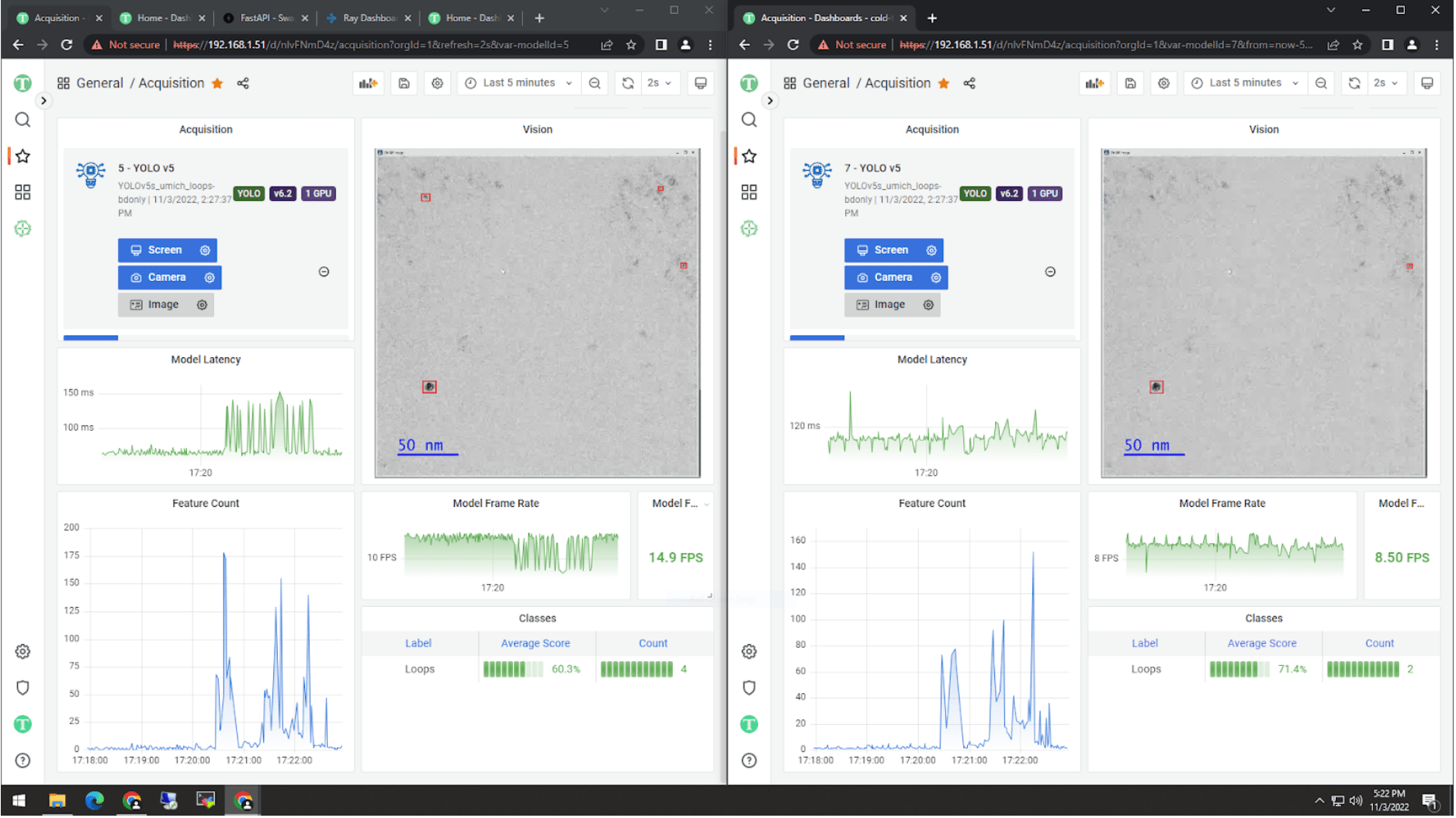

Working with NOMElab at the University of Michigan, real-time automated microscopy image analysis and quantitation with multiple parallel inference models was successfully achieved with two separate state-of-the-art electron microscopes.

The 20+ devices act as a “hive”, and Graphics Processing Units (GPUs) from any node in the cluster/hive are dynamically utilized to run multiple models while scientists and engineers run an electron microscope.
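The post does not describe how models are placed onto nodes in the hive. Purely as an illustration of what dynamic use of any available GPU can mean, the Python sketch below assigns each model to the node that currently has the fewest models assigned. The least-loaded policy, the function name `assign_models`, and the node and model names are all assumptions, not the Theiascope™ scheduler.

```python
import heapq
from typing import Sequence


def assign_models(models: Sequence[str], nodes: Sequence[str]) -> dict[str, str]:
    """Place each model on the node with the fewest models so far.

    Ties are broken alphabetically by node name, so the result is
    deterministic for a given input order.
    """
    if not nodes:
        raise ValueError("at least one node is required")
    # Min-heap of (number of assigned models, node name).
    heap = [(0, node) for node in sorted(nodes)]
    heapq.heapify(heap)
    placement: dict[str, str] = {}
    for model in models:
        load, node = heapq.heappop(heap)
        placement[model] = node
        heapq.heappush(heap, (load + 1, node))
    return placement


if __name__ == "__main__":
    # Hypothetical model and node names.
    hive = ["orin-1", "xavier-1", "xavier-nx-1"]
    for model, node in assign_models(["defects", "grains", "voids", "cells"], hive).items():
        print(f"{model} -> {node}")
```

A real scheduler would likely weigh GPU memory, device capability, and live utilization rather than a simple count, which matters in a fleet as mixed as this one.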

Real-time quantitative results are displayed to the users for each model as a series of user-customizable dashboards using the plethora of visualizations from Grafana. Using the power of the balena fleet management system and the architecture of the Theiascope™ platform, updates with new features, AI model frameworks, and bug fixes are seamlessly pushed to all devices in the fleet deployed at the University of Michigan. This creates a close, tight feedback loop between users of electron microscopes and Theia Scientific.

The 20+ devices are a mixture of ARM and x86-64 CPUs as well as a variety of GPUs from NVIDIA, including desktop or workstation-grade GPUs. So, real-time microscopy image analysis is achieved not only with different electron microscopes but also with different types of CPUs and GPUs. Thus, the fleet is truly heterogeneous, and, combined with the dynamic usage and distribution of compute resources, the fleet is also “ad hoc”.

An interesting outcome of having Jetson AGX Orin support and being able to handle different types and form factors of GPUs was the ability to add a desktop GPU to a Jetson AGX Orin to create a near-space, two-node GPU heterogeneous cluster, which was nicknamed the “Hot Rod” because of the custom, after-market modification and construction. This was a demonstration of the flexibility of the Theiascope™ platform, but it was not deployed at the University of Michigan. Instead, a multi-GPU workstation was converted to a Theiascope™ based on the knowledge and experience gained from demonstrating the Hot Rod configuration.

Conclusion

If you are ready to start exploring on your own, check out the team’s balena application project on balenaHub, which comes with the Balena Application plugin integrated for your convenience. While you’re at it, check out Theia Scientific’s and Volkov Labs’ capabilities.

As mentioned, the platform also relies on an NFS server to share external storage between containers, an approach discussed in the balena blog post about using an NFS server to share external storage between containers.

The world of IoT devices is fascinating. There is a huge demand for IoT systems and servicing software. We are happy to share our solutions and experience with the balena communities.

Hi. What was the image size for which the fps numbers are shown in this thread? thanks!

Good question @thephee

@Mikhail do you have an answer to this question?

@thephee We usually capture images at 1080p and then scale to 640×640 for processing.

Are you doing similar AI processing? What do you use and what is your fps?