In this post, we’ll show you how we use our own platform to build our infrastructure and provide our engineering team with a reliable development environment. Find out how we optimised and refined the configuration of our software stack so the entire balenaCloud platform can run on balenaCloud and even on the balenaFin.

Project BoB – Meta-Platforming Ourselves

About a year ago, I was chatting with our CEO Alex Marinos, and he returned to something we’d been talking about on and off for ages: why couldn’t we just run our development environment (Devenv) and On-Premises product on a device provisioned with balenaOS? After all, we’d just launched multicontainer and Docker Compose support for microservices applications and, in theory at least, had everything required for executing the entire balenaCloud product, which is already deployed as a set of Docker service containers.

We decided that perhaps it was the right time to invest some work looking into exactly why we weren’t able to run our stack on balenaOS. We picked an Intel NUC with a modest amount of memory (16GB) and an i5 CPU as the target for running a local instance of balenaCloud.

Project Balena-on-Balena, or what we like to call “BoB”, was born!

But why would we want to do this in the first place? To understand that, you need to know a little of the history of our development environment.

The Devenv – A History

Here at balena, we have historically undertaken most of our development work (especially for the product’s backend services) in a Devenv. Due to the wide range of experience and specialties of our engineers, we allow them to select and manage which operating system they use for their day-to-day work. There’s a pretty even split between those of us using macOS and those of us using Linux (plus a few Windows users).

Accordingly, we need a Devenv that can function on all of these platforms. This consists of a Virtual Machine (usually a VirtualBox instance controlled via Vagrant). This is not an unusual choice in the world of full-stack development, but it does bring with it some downsides:

- Upfront resource (CPU, memory and to a degree, disk) ‘stolen’ by the VM

- Vagrant and VirtualBox versions frequently conflict

- Supporting individual users when they have an issue can become very complicated, due to varying setups on their development machine

- Static networking setup; for example DNS is set statically with a known IP and hostname

Resources are an especially pressing concern here, as the more work that is undertaken in the VM-based Devenv, the more space it consumes. Factor in that engineers may have multiple Devenvs present on their machines at the same time (as they may be working on several issues that require different networking, device types, etc.), and storage space starts to come at a premium.

The balena backend itself consists of a number of Docker service containers which are hosted by a cloud platform, behind appropriate load balancers. These services include the API, the VPN, image builders, and so forth. It’s fairly easy to emulate this in the Devenv by installing Docker and Docker Compose and then carrying out some clever ‘hacks’ to ensure networking functions both inside and outside the VM.

A diagram detailing the VM-based setup used to date:

We have also used this Devenv setup as the basis for our On-Premises solution by providing a heavily modified and user-friendly version for On-Premises customers. Whilst this gives the customer something akin to our own Devenv, a large amount of work is required to ensure that it works on multiple targets. On-Premises version updates are also very large due to the nature of the VM-based approach.

After starting at balena, I rapidly elected to maintain the Devenv and made several changes that made it easier to set up and use. However, due to the variation in engineers’ choice of platforms, networking and other factors, it became increasingly clear that there was a huge difference between supporting the approximately twenty engineers we had at the time and the more than forty that we employ now. This was not an ideal situation, and clearly something had to change.

A move away from a Devenv based around a VM to a platform where development could occur on balenaOS would remove a wide range of support issues and allow us to provide a consistent platform for all engineers. We would, in effect, become our own customer.

Digging Into The Stack

The first challenge was immediately obvious. We didn’t want to have separate versions of our services running in our cloud product and on balenaOS, so we needed to ensure that the service images we built would run without any changes regardless of underlying platform.

Upon investigation, we discovered that some of our services hadn’t moved to the latest version of our base container (open-balena-base), which ended up causing issues. As part of the move to balenaOS, we wanted to remove any reliance on both host networking and privileged containers, and this required features such as the latest systemd changes present in Debian Stretch (moving away from Jessie). We ended up picking through all of our services, ensuring all of them were able to run under Stretch, updating or rebuilding dependencies as we went, and ensuring that they were no longer able to fall behind on their base versions. We now had a clean set of backend services, all using the same base image, with which to work.

The networking requirements also proved interesting; the VM’s networking had been set up in such a way that there were known, static IP addresses for all components, and this obviously was not a viable setup going forward. Whilst running BoB on balenaOS was a requirement, it should ideally also run on multiple flavours of OS and networking, as this would ensure as flexible a system as possible. As we use HAProxy as a load balancer and ingress for the services in the Devenv, it was fairly simple to get to a point where a default Docker bridge could be used, and HAProxy moved from host networking (as it had worked in the Devenv) to bridge networking on any Docker-supported platform.

In addition to heavily modifying the services and infrastructure to make them platform agnostic, we also made several improvements to the system as a whole. These included such items as:

- Self-signed CA support – No mean feat given that applications such as NodeJS (which our on-device Supervisor uses) utilise their own certificate stores

- Zero configuration DNS support via Avahi (allowing the use of .local domains), across both the backend and devices

- Improved logging output to ensure that the Supervisor could output all service logs to the Dashboard/balena-cli
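
To illustrate the certificate store point: Node.js ships its own bundled CA list and does not read the system store by default, so a self-signed CA has to be handed to it explicitly. One documented way of doing that is the NODE_EXTRA_CA_CERTS environment variable. The sketch below only shows that idea and is not necessarily how the Supervisor was changed; the helper name and the CA path are hypothetical.

```python
import os


def node_env_with_ca(ca_path, base_env=None):
    """Return a copy of an environment with NODE_EXTRA_CA_CERTS set.

    Node.js reads this variable at startup and adds the certificates in
    the given PEM file to its bundled CA store.
    """
    env = dict(os.environ if base_env is None else base_env)
    env["NODE_EXTRA_CA_CERTS"] = ca_path
    return env


# Hypothetical location of the self-signed root CA (PEM format).
env = node_env_with_ca("/etc/ssl/private-ca/ca.pem", base_env={"PATH": "/usr/bin"})
print(env["NODE_EXTRA_CA_CERTS"])
```

The returned dictionary could then be passed as the environment of whatever launches the Node.js process, leaving the parent environment untouched.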

After about six months of work, we were in a position where not only could we run the entire balenaCloud product as a multicontainer application on a provisioned device, but we could even provision more devices from that application, each running BoB. At one point, we had six levels of BoB running, each with devices themselves chained to a parent instance of BoB and themselves running BoB. As the saying goes, ‘Turtles all the way down’.

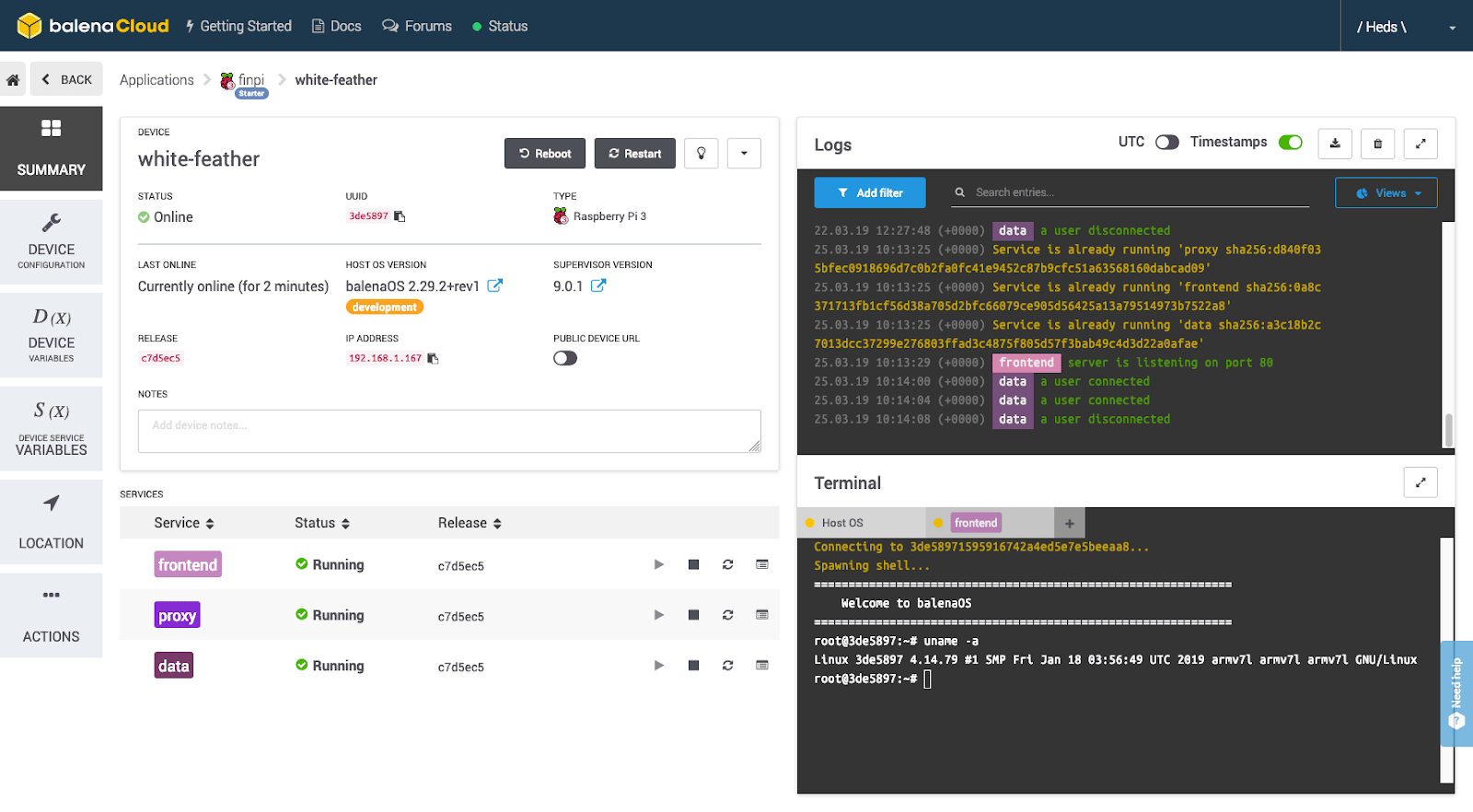

You may notice the type of device the balenaCloud backend is running on in that screen capture… more on that later.

Further work included tooling to allow us to quickly instantiate instances of BoB, and extra functionality (such as installing the device types required to allow provisioning).

A BoB instance could be deployed to any provisioned x64 device using the balena CLI utility with a simple balena push or balena deploy.

We were now fully consuming our own dogfood. The work had touched almost all the moving parts of our product, from our backend services to the Supervisor and even balenaOS. We’d found minor, and major, issues which may have potentially ‘bitten’ us in the future and successfully resolved them. We now had a stack that was able to run on multiple platforms without any extra work required, which resulted in much more rapid and stable development.

BoB Based Devenv and On-Prem

This work has enabled us to carry out a few important things. Firstly, we can remove the requirement for a VM-based Devenv, and instead supply engineers with a very reasonably priced x64 based box, such as an Intel NUC, as a development environment. Rather than having to allocate set resources on their development machines, they have a dedicated piece of hardware that acts as a local instance of our entire infrastructure to develop on.

Secondly, it means that we can offer customers On-Premises versions of balenaCloud which are updated as easily as pushing a new application, as they are managed as ‘just another’ balenaCloud provisioned device. This allows us to supply On-Premises solutions either on a dedicated piece of hardware or, with minor configuration changes, on any virtualization platform they wish to use, installed from an image file preloaded with the BoB application. Additionally, should they require air-gapped networks, we can use a NUC as a parent balenaCloud instance to initially provision, and later update, the On-Premises instance in-house at the customer’s location, and supply them with an initial BoB preloaded image.

Support also becomes greatly simplified, as it consists of being able to use our own product, instead of battling with custom platform setups. We can offer a simple set of instructions to engineers for provisioning a Devenv, and then modifying the Docker Compose file for developing the service container they’re interested in (using device local mode). We can also push frequent updates to the main application to ensure that every engineer is working on the same codebase. There’s also another fantastic benefit. Should an engineer have trouble with the Devenv, it allows us to use the balenaCloud service to SSH into their Devenv (via its VPN) and investigate what might be occurring. This is a massive advantage, which previously would have required the engineer to have installed other tools to allow access, also potentially decreasing their networking security. The same holds true for On-Premises offerings.

A diagram detailing the new, simpler and consistent BoB-based Devenv is shown below:

This moves us closer to being able to use the same tooling internally as our users do externally, which can only further benefit both balena and our users.

FinBoB

At balena, we run what are known as ‘Hack Fridays’. Every Friday everyone in the company has the opportunity to switch to a fun project they want to work on, as long as it uses our products in some way (a great way to carry out extra user testing, as well!). In the past, people have built a variety of cool things, and it’s well worth checking out the balena playground repos to have a look at these.

Recently, I wanted to try and do something a bit more… involved for a Hack Friday, and embarked on something that ultimately took three Fridays. I wanted to try and run BoB on a Fin. If you don’t know about the balena Fin, here’s a blog post about it (and if you want to buy one, here’s a link to the store). The Fin is a Raspberry Pi Compute Module 3/3+ compatible carrier board, and given the ubiquity of the Pi, what better device to try and run our backend on!

The Fin is available in several flavours, the most fitting for BoB being the 64GB eMMC version, as this gave plenty of storage room for all the backend services as well as all associated data.

To speed up the work, I created a local instance of a BoB running on a NUC, and then provisioned a Fin against it, allowing me to quickly push code to it across my local network. I dived into examining what would need alteration to run balenaCloud as a multicontainer application on the Fin.

Initially, it became obvious that this wasn’t just going to be a case of including ARMv7 versions of Debian Stretch in our base images. A number of dependencies for our services either weren’t packaged for the ARM version or needed extra support to build. I set about attempting to get a barebones version running; an underlying database, the API and the UI. This was achieved by the end of the first Hack Friday, by which point I had a good idea of the other components that would need modification to move forwards.

Gradually, over the course of the next Friday, several other services (the Image Maker, our Git server and a few others) fell into place, and by the end of that day it was possible to sign up, create a new application and download a config with which to provision a device (although the device could only register itself, and not connect to the VPN or download an application).

It was at this point that some limitations of the hardware had started to become apparent.

Cooling was a major factor. The BCM2837 SoC, which the Compute Module 3 is built around, has thermal protection built in: it reduces the clock speed when the chip hits 80 degrees centigrade. Unfortunately, that appeared to be exactly what was happening. With all of the services ported so far running, the temperature consistently hit 80C, and both the heat radiating off the board and the slowdown were noticeable. The Fin had initially been running inside a case, so the case was quickly removed and a desk fan pointed at the Fin’s PCB. But it wasn’t an ideal situation.

Luckily, at the same time, the fabulous Fin team had added support for the CM3+, which uses a BCM2837B0 SoC with a better thermal profile, as well as a better profile for the PCB itself. I was lucky enough to be shipped one by the team, and the difference was obvious. I was able to run the system under 80C, mostly at a consistent 70-75C. The Fin team is also currently working on a new aluminium case design, which will greatly improve passive cooling for the CM3(+). I expect this to have yet another dramatic effect on the temperature range when running FinBoB.
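
For anyone wanting to watch the same thing on their own device, here is a minimal sketch of how the headroom below the 80C throttle point could be checked. It assumes the standard Linux thermal sysfs interface, which reports millidegrees Celsius, and that the SoC is exposed as thermal_zone0 (the zone index is an assumption and may differ per board). The function names are illustrative only, and the demo uses a sample reading rather than real hardware.

```python
from pathlib import Path

THROTTLE_C = 80.0  # throttle point quoted in this post


def parse_millidegrees(raw):
    """Convert a sysfs reading such as '72500\\n' to degrees Celsius."""
    return int(raw.strip()) / 1000.0


def read_soc_temp(path="/sys/class/thermal/thermal_zone0/temp"):
    """Read the current temperature from the thermal sysfs interface."""
    return parse_millidegrees(Path(path).read_text())


def headroom(temp_c, limit_c=THROTTLE_C):
    """Degrees left before the throttle point (zero or negative means throttling)."""
    return limit_c - temp_c


# Sample value only; on a device, call read_soc_temp() instead.
temp = parse_millidegrees("72500\n")
print(f"{temp:.1f} C, {headroom(temp):.1f} C below the throttle point")
# prints "72.5 C, 7.5 C below the throttle point"
```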

The other problem was that of RAM. The CM3+ only has 1GB of RAM, and the backend running at that point took around 980MB of it. I wasn’t going to be able to fit in the VPN, Docker Registry or Proxy services that would let a provisioned device communicate with FinBoB (and in fact was amazed at how well we’d managed to reduce our service size to get the current number of services into 1GB).

So instead I took a different tack, and wondered how slow swap would be. Creating a simple swapfile on the /mnt/data partition, enabling it and adding it to the filesystem table to allow it to be used at boot, worked without issues. It turns out that the eMMC on the Fin, which is industrial-grade, was more than sufficient for the use of swap. There was no appreciable speed difference.
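
The swapfile steps described above can be sketched as follows. This is a generic Linux recipe rather than the exact commands used on the Fin: the file name under /mnt/data and the 1024MB size are assumptions, and how the filesystem table entry is persisted on balenaOS is not covered. The helper only builds the commands and the fstab line so they can be reviewed before being run as root.

```python
import shlex


def swapfile_plan(path, size_mb):
    """Return (commands, fstab_line) for creating and enabling a swapfile."""
    commands = [
        # Allocate the file by writing zeros, one MiB at a time.
        ["dd", "if=/dev/zero", f"of={path}", "bs=1M", f"count={size_mb}"],
        # Swap files should only be readable by root.
        ["chmod", "600", path],
        # Write the swap signature, then enable the swap area.
        ["mkswap", path],
        ["swapon", path],
    ]
    # Filesystem table entry so the swapfile is enabled again at boot.
    fstab_line = f"{path} none swap sw 0 0"
    return commands, fstab_line


# Hypothetical file name and size.
commands, fstab_line = swapfile_plan("/mnt/data/swapfile", 1024)
for cmd in commands:
    print(shlex.join(cmd))
print(fstab_line)
```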

With swap enabled and a cooler-running Compute Module, the final Friday saw almost all the balenaCloud backend services executing. Finally, the full experience could be carried out on FinBoB: creating an application, provisioning a device (a Raspberry Pi 3, of course!) and pushing code to the device. The following screen capture shows the Dashboard of a BoB running on a balenaFin provisioned from balenaCloud, with a provisioned Raspberry Pi 3 attached running our standard multicontainer demo application.

The FinBoB project has also yielded some interesting results that can be rolled back into our main product, as there were several issues with moving to a different architecture that can be investigated and fixed. This work is currently in progress, and should be an interesting exploration into some Linux architecture differences. We may well blog about these in the future!

The Future of BoB

As well as running on balenaOS, the BoB project has allowed us to start using our own product more as an engineering team, and this in turn allows us to use the product to develop itself. This has started to see great returns, and we’re attempting to move closer and closer to an internal development model where we use exactly the same tooling we provide our customers.

We’re currently in the process of developing some features in balena-cli which will see us able to carry out rapid engineering using BoB, and which we can roll out to our customers for their own development.

Watch this space!

Thanks for reading! We hope you enjoyed learning more about how our infrastructure works and how we develop balena on balena. If this post has raised any questions or you’d like to know more, please join us in our forums, on Twitter @balena_io, on Instagram @balena_io or on Facebook where we’d be more than happy to answer!