Automated testing of balenaOS ensures that we can continuously deliver new features, address bugs, and support new device types in a timely manner. Here at balena, we utilize hardware-in-the-loop and virtualized testing. In this blog post we will give a high-level rundown of the tools and pipeline that we’ve developed to achieve that!

What is balenaOS?

balenaOS is an embedded Linux operating system optimized for running containers on embedded devices, and it’s a key part of the balena platform. Having an OS as part of the balena stack also allows us to abstract away lower-level details of the hardware connected to balenaCloud and provide a standardized interface across many different device types.

BalenaOS is mandatory for devices that are connected to balenaCloud. This means that for a large range of hardware to be compatible with balenaCloud, balenaOS must be ported to each of these devices. We already support more than 90 distinct device types on balenaCloud, and that number keeps growing. We are also committed to delivering continual updates of the OS, to bring new features to our users and address any bugs or required fixes.

The challenge of releasing an OS for hundreds of devices

This leads to a significant testing challenge. Every new OS release must be tested on every device that balenaOS has been ported to before it ships. The upfront cost to support a device in the first place is fixed, but ongoing testing and maintenance has no upper bound (unless the device is discontinued). We create more of a testing load with every device that we add.

Manually carrying out these tests is not practical! It wastes the time of our OS engineers, slows down the rate at which we can release new versions of the OS, and is prone to human error. To combat these challenges, we’ve been developing an automated solution to this problem.

Our testing toolkit

AutoKit – Testing balenaOS on physical devices

Our Requirements

To test that balenaOS works as expected on a device, we have to test it on the physical hardware it will run on. This means we needed a way to interact with the device in an automated, hands-free way. To test a draft version of balenaOS, we needed a way to flash devices with this OS, remotely and automatically. Depending on the device, this meant that we needed to automate the following:

- Flashing an SD card, and placing it into the SD card slot of the device under test (DUT)

- Controlling the power to the DUT

- Detecting if the DUT is on or off

- Toggling a switch on the DUT to select its boot mode

- Simulating the plugging and unplugging of a USB cable

This would provide the bare minimum needed to flash, provision & boot the draft OS version onto the DUT, ready to be tested. Then, in order to test specific features of the OS, we also needed some extra capabilities:

- Display capture

- Controllable Wi-Fi and Ethernet connection supplied to the DUT

- Serial communication

- Ability to interact with other interfaces on the DUT

- A means to add new hardware to respond to future requirements
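The first list of requirements can be pictured as a small control interface. The sketch below is purely illustrative: `FakeHarness`, its method names, and the `"internal"`/`"sd"` boot modes are hypothetical and are not the AutoKit API. It models only the flash, power, boot-switch and USB controls in memory, to show the order in which a provisioning step might use them.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FakeHarness:
    """In-memory stand-in for the hardware controls (hypothetical names)."""

    powered: bool = False
    boot_mode: str = "internal"  # assumed switch positions: "internal" or "sd"
    usb_connected: bool = False
    flashed_image: str = ""
    log: List[str] = field(default_factory=list)

    def set_power(self, on: bool) -> None:
        self.powered = on
        self.log.append("power on" if on else "power off")

    def is_powered(self) -> bool:
        return self.powered

    def set_boot_mode(self, mode: str) -> None:
        if mode not in ("internal", "sd"):
            raise ValueError(f"unknown boot mode: {mode}")
        self.boot_mode = mode
        self.log.append(f"boot mode {mode}")

    def set_usb(self, connected: bool) -> None:
        self.usb_connected = connected
        self.log.append("usb plug" if connected else "usb unplug")

    def flash_sd(self, image_path: str) -> None:
        # Refuse to rewrite the storage medium while the DUT is running.
        if self.powered:
            raise RuntimeError("power off the DUT before flashing")
        self.flashed_image = image_path
        self.log.append(f"flash {image_path}")


def provision(harness: FakeHarness, image_path: str) -> bool:
    """Power down, select SD boot, flash the draft image, then boot the DUT."""
    harness.set_power(False)
    harness.set_boot_mode("sd")
    harness.flash_sd(image_path)
    harness.set_power(True)
    return harness.is_powered()
```

Calling `provision(FakeHarness(), "draft.img")` walks through the four steps in order; a real harness would replace each method body with a call to the matching piece of hardware.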

Our Solution

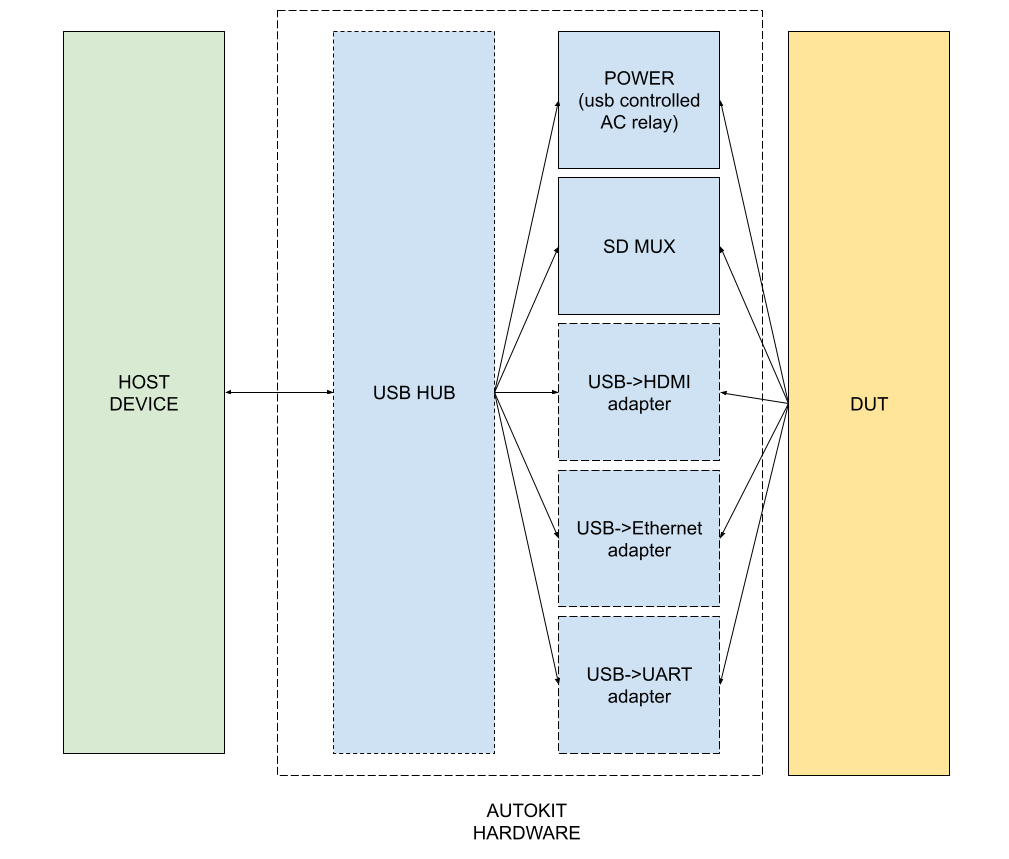

Our solution: the automation kit (AutoKit). Instead of reinventing the wheel and designing our own board (we tried twice in the past, and will cover the journey to the current state of this project in a future post!), we composed a series of off-the-shelf devices that together offer the functionality we wanted. These are all readily available USB devices, meaning that we have a common physical interface that can be consumed by any test-running device that has access to a USB port.

We treat the AutoKit as a suite of USB bridges to other interfaces. For example, to capture the display output of a DUT, we use a USB-to-HDMI adapter. Following this philosophy means that whatever interface on the DUT we wish to interact with, we have a common interface on the AutoKit side through which to do so.

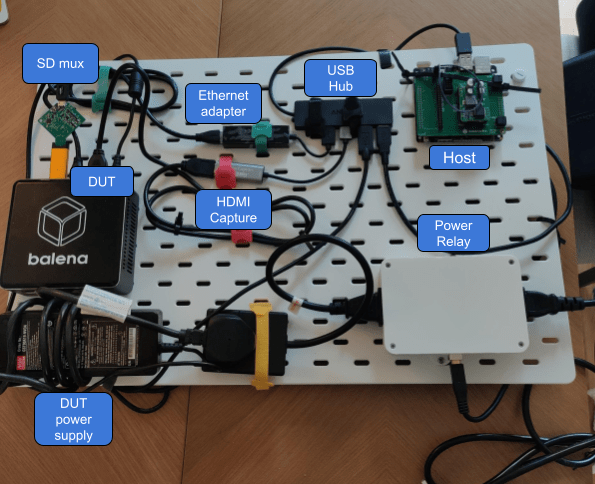

What that looks like in reality is this (this is our current beta):

The AutoKit gives us automated fingers, eyes and ears on the DUT, and a simple software API to control it. What’s better, all the hardware components needed to build an AutoKit can be bought off the shelf, and all the software is open source.

QEMU – Testing on virtualized devices

However, we quickly ran into resource issues when running all tests only on physical DUTs using AutoKit, causing large queues for test jobs and slow feedback. Supply chain issues made this worse, and it became impossible to buy devices to test on. We also ran into problems where we would get false negatives, leading to time lost determining whether the OS, the AutoKit, or the DUT was at fault for a failed test.

In order to mitigate both of these issues, we started testing on virtual DUTs using QEMU. Testing on virtualized devices allows for greater horizontal scaling, and ensures a reproducible and deterministic environment. It can also act as a sanity check: if a bug appears in this environment, you know immediately that it is real, without having to rule out a problem with the hardware.
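To make the idea of a virtual DUT concrete, here is a minimal sketch that builds a QEMU command line for booting a raw disk image without a display. It is an assumption about what such an invocation could look like, not the command our pipeline runs: the function name, the image path, and the memory and CPU defaults are all hypothetical, while the flags themselves are standard QEMU options.

```python
from typing import List


def qemu_command(image_path: str, memory_mb: int = 2048, cpus: int = 2) -> List[str]:
    """Build an argument list that boots a raw disk image headlessly."""
    return [
        "qemu-system-x86_64",
        "-m", str(memory_mb),                # guest RAM in MiB
        "-smp", str(cpus),                   # number of virtual CPUs
        "-drive", f"file={image_path},format=raw,if=virtio",
        "-netdev", "user,id=net0",           # user-mode networking, no root needed
        "-device", "virtio-net-pci,netdev=net0",
        "-nographic",                        # serial console on stdio, no GUI window
    ]
```

The list can be handed to `subprocess.run` on a machine with QEMU installed. Because every virtual DUT is just a process started from the same arguments, adding capacity means starting more processes rather than buying more boards.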

Developing this QEMU workflow helped us improve our “generic-

Leviathan – Our OS testing framework

The AutoKit provides the physical layer of our testing stack, giving us a simple API to control the device under test (DUT), while our virtualized devices speed up testing and enable us to scale horizontally. However, in order to integrate these into our CI, and to write and run tests against balenaOS, we need a software layer above them to handle certain tasks. Leviathan is the name of our OS testing framework, and it handles the following jobs:

- It provides an abstraction layer that lets you run the same tests, with the same API, against either physical DUTs or virtual QEMU DUTs. Virtualized testing has been invaluable, as it provides quicker feedback and scales horizontally.

- It provides a structure in which to write tests, and commonly used helper functions to prevent the team from having to rewrite the same code over and over.

- It sends tests and OS images to the AutoKits in our testing rig worldwide.

- It pulls back results after the tests complete, closing the continuous integration loop to approve or reject a balenaOS release.
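The abstraction layer in the first bullet can be sketched as one interface with interchangeable backends. This is not Leviathan’s code or API: `Worker`, `PhysicalWorker`, `VirtualWorker`, `check_os_name` and `run_suite` are hypothetical names, and both backends are fakes that only record calls and return a canned reply. The point is that the check itself never needs to know which kind of DUT it is talking to.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict, List


class Worker(ABC):
    """Common interface a test sees, whatever kind of DUT is behind it."""

    @abstractmethod
    def flash(self, image: str) -> None: ...

    @abstractmethod
    def power_on(self) -> None: ...

    @abstractmethod
    def run(self, command: str) -> str: ...


class FakeWorker(Worker):
    """Records calls and returns canned output; no hardware or VM involved."""

    kind = "fake"

    def __init__(self) -> None:
        self.events: List[str] = []

    def flash(self, image: str) -> None:
        self.events.append(f"{self.kind}: flash {image}")

    def power_on(self) -> None:
        self.events.append(f"{self.kind}: power on")

    def run(self, command: str) -> str:
        self.events.append(f"{self.kind}: run {command}")
        return 'NAME="balenaOS"'  # canned reply, assumed for this sketch


class PhysicalWorker(FakeWorker):
    kind = "physical"  # a real backend would drive an AutoKit here


class VirtualWorker(FakeWorker):
    kind = "virtual"  # a real backend would drive a QEMU guest here


def check_os_name(worker: Worker) -> bool:
    """A backend-agnostic check: it only uses the Worker interface."""
    return "balenaOS" in worker.run("cat /etc/os-release")


def run_suite(
    worker: Worker, image: str, checks: List[Callable[[Worker], bool]]
) -> Dict[str, bool]:
    """Flash and boot the DUT, then run every check and collect the results."""
    worker.flash(image)
    worker.power_on()
    return {check.__name__: check(worker) for check in checks}
```

Passing either `PhysicalWorker()` or `VirtualWorker()` to `run_suite` runs the identical list of checks, which is the property that makes it possible to move a suite between hardware and QEMU without rewriting it.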

Using AutoKit, QEMU and Leviathan to test balenaOS

Given the physical ability to flash and communicate with a DUT using AutoKit or QEMU, and the ability to orchestrate and run test jobs on the appropriate hardware using Leviathan, we can now begin to test balenaOS!

Now the question remains: what do we test? BalenaOS is an operating system composed of many open source components, such as NetworkManager, OpenVPN, Chrony, and balenaEngine. Each of these components has its own test suite that is used to verify that the component behaves as expected before changes are merged.

But we didn’t want to test those things again in isolation. As the OS is a composition of components, we have written integration-level tests that test the behavior of the system as a whole.

We have 3 test suites that test balenaOS as a whole:

- Unmanaged OS test suite: This test suite verifies that balenaOS works as expected by itself – in isolation from balenaCloud. It tests that all advertised features of the unmanaged operating system work as intended.

- Cloud test suite: This suite tests the OS integration with balenaCloud. For example, it checks that devices can be moved between fleets, that SSH access via the balenaCloud proxy works, that new releases can be pushed to the device, that deltas work, and so on.

- Host OS update (HUP) suite: The ability to update balenaOS remotely, over the air, is a key feature of balenaOS. This test suite not only verifies that this mechanism works, but also simulates failed HUPs and checks that our critical rollback mechanisms work correctly and that the device is working properly afterwards.
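To illustrate what “simulating a failed HUP and checking the rollback” means, here is a toy model. It assumes a device with two OS slots where an update is written to the inactive slot, booted, and reverted if a health check fails. That two-slot model, and the names `ToyDevice` and `host_os_update`, are assumptions made for this sketch and say nothing about how balenaOS implements updates internally.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ToyDevice:
    """Toy device with two OS slots; only one is active at a time."""

    slots: Dict[str, str] = field(default_factory=lambda: {"A": "1.0.0", "B": ""})
    active: str = "A"

    @property
    def running_version(self) -> str:
        return self.slots[self.active]


def host_os_update(
    device: ToyDevice, new_version: str, health_check: Callable[[ToyDevice], bool]
) -> bool:
    """Install into the inactive slot, switch to it, roll back if unhealthy."""
    previous = device.active
    target = "B" if previous == "A" else "A"
    device.slots[target] = new_version  # write the new OS to the inactive slot
    device.active = target              # "reboot" into the new slot
    if health_check(device):
        return True
    device.active = previous            # rollback: return to the known-good slot
    return False
```

A rollback test in this model forces the health check to fail and then asserts that the device is still running the version it had before the update was attempted.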

BalenaOS is a Yocto Linux-based operating system. Its “core” layer, meta-balena, is available in the meta-balena repository. In addition to the meta-balena layer, the OS for each device includes a device-specific layer. The balenaOS test suites run on every PR in both the meta-balena and device-type repositories.

Every pull request in the meta-balena repository builds balenaOS for each device type we support. This helps in two ways: firstly, we know the change hasn’t broken the balenaOS build. Secondly, it gives us balenaOS artifacts to test against on our Leviathan workers. After the build is successful, this draft release of balenaOS is tested using virtualized QEMU DUTs, for each architecture that we support in Leviathan.

The benefit of testing with a virtual worker is the scalability and consistency it offers. Changes are frequent in this repo, and being able to check that the OS works in the way we expect, as a whole and independently of each device type, is critical at this step. Virtualized testing prevents resource bottlenecks and helps us scale our testing capacity to provide faster, consistent feedback on pull requests. If the tests fail here, we can be confident that there is an issue with the OS, not with the hardware.

Once the Leviathan tests pass, the pull request is approved and merged. Our dependency management tool, Renovate, automatically opens PRs in each device-type repo, bumping that device type’s meta-balena version to pick up the changes.

For each of these PRs, Leviathan automatically triggers the same test suites on the relevant physical devices connected to AutoKits. Once these tests pass, the PR is approved and merged, finally releasing a new balenaOS version for that device type into production!
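The two-stage gating described in this section can be summarized in a few lines. The sketch is a simplification under stated assumptions: `run_pipeline` and its callback parameters are hypothetical, and real builds, test runs and merges are replaced by functions that simply return pass or fail.

```python
from typing import Callable, Dict, List


def run_pipeline(
    device_types: List[str],
    architectures: List[str],
    build_ok: Callable[[str], bool],
    virtual_ok: Callable[[str], bool],
    hardware_ok: Callable[[str], bool],
) -> Dict[str, str]:
    """Return a release status per device type after both gating stages."""
    # Stage 1: the meta-balena PR must build for every device type...
    for device_type in device_types:
        if not build_ok(device_type):
            return {dt: "blocked: build failed" for dt in device_types}
    # ...and pass the virtualized tests for every architecture.
    for arch in architectures:
        if not virtual_ok(arch):
            return {dt: "blocked: virtual tests failed" for dt in device_types}
    # Stage 2: each device-type PR is gated on its own hardware tests,
    # so one failing board does not hold back the others.
    return {
        dt: "released" if hardware_ok(dt) else "blocked: hardware tests failed"
        for dt in device_types
    }
```

The shape of the return value reflects the design: a failure in the first stage blocks everything, because the shared layer is suspect, while a failure in the second stage blocks only the affected device type.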

And that’s just one balenaOS version being released for a single device type. The process has been scaled to release 20+ balenaOS versions fully automatically. We make this all possible using our AutoKit rigs, which are deployed worldwide.

AutoKit rigs – Scaling our tests

Now that we have our tests and our mechanisms to run them, it’s a matter of setting up lots of AutoKits connected to lots of DUTs so we can run tests and automatically release new versions of the OS on as many devices as possible!

Physically, this means setting up housing (in our case, a giant shelf) and plugging in several AutoKit tiles. We created AutoKits to be general-purpose kits that can be assembled without a lot of expertise and provide folks a LEGO-like building experience.

This picture shows the rig setup in one of our offices. We also have smaller rigs of AutoKits distributed in different locations. Our test framework doesn’t care where they are, and sees all devices as a shared pool of workers.

This is where balenaCloud itself proved to be very useful. Each AutoKit is actually a balena device, and all of them, regardless of their location, are part of the same balenaCloud fleet. Our test framework can therefore find them, talk to them, and manage them via our own product! If we want to add a new AutoKit to the rig, all we have to do is provision the host device with a balenaOS image configured for the fleet, plug it in, and we instantly have a new worker in the pool. This lets us scale up our physical workers easily.
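The “shared pool” idea can be sketched as a lookup that matches on device type and ignores location. `RigWorker`, `claim_worker`, and the worker names and locations used in the example are made up for illustration; the real pool is discovered through the balenaCloud fleet rather than a Python list.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RigWorker:
    name: str
    device_type: str   # the DUT attached to this AutoKit
    location: str      # informational only; never used for matching
    busy: bool = False


def claim_worker(pool: List[RigWorker], device_type: str) -> Optional[RigWorker]:
    """Reserve the first idle worker with a matching DUT, wherever it is."""
    for worker in pool:
        if worker.device_type == device_type and not worker.busy:
            worker.busy = True
            return worker
    return None  # nothing free right now; the job has to wait
```

Adding a worker to the pool is just appending another entry, which mirrors how provisioning one more AutoKit into the fleet adds capacity without any change to the tests.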

Automated testing in action

While this workflow is still a work in progress, the gains are already rewarding. Manually testing balenaOS used to take our engineers hours for each device type for every release. It also meant that we had to “batch” release the OS, instead of “streaming” updates to it. Fixes might have taken months of going back and forth between teams to test, deploy and get feedback on. While there are still growing pains, our hardware-in-the-loop testing strategy has reduced our release cycle from weeks to a few hours, giving our OS team more time to focus on developing new features.

One recent example: we had a customer contact us via our support channels with an issue they had been facing with our redsocks feature in the OS. A fix was submitted by our engineers, and the next day, with no manual intervention, the fix was tested on the actual hardware and deployed to a new production OS release. This is a big win for both our customers and us, and we are working on making this even smoother, as well as scaling it up in the near future.

Here’s what you can expect to see in the future!

Our aims going forward for the OS testing pipeline and the tools that compose it are:

- Improving the hardware of AutoKit to simplify its setup and deployment, allowing us to scale up in a tidier manner. We are looking into server rack mounts to be able to host these tiles in an organized and reproducible way.

- Scaling up our testing efforts to support more device types.

- Improving the consistency and user experience of our testing framework.

- Making AutoKits available for customers.

- Expanding AutoKit to be used in other use cases – for example testing fleets and blocks on real hardware.

Building in the open, sharing our work

Vipul recently presented “Testing 100’s of OS Images with Jenkins” at CDCon + GitOpsCon 2023. The talk covers the journey balena takes to build, test and release balenaOS, from pull request to production, using AutoKit, and how you can use it in your own work. We look forward to sharing more about our project in the future to help folks build their hardware-in-the-loop pipelines without reinventing the wheel.
