From my earliest conversations with balena, it was clear my role would be to help integrate robotics tools such as ROS (Robot Operating System) with the balena ecosystem. I saw this as the perfect excuse to start building on a decade-long dream: an accessible robotics platform that can be used to teach, learn, and develop for years to come.

In this post, we’ll talk about fleets of robots and how we plan on using balena and ROS to provision and manage them. Read on for more background information or jump to the details about our robotics block.

Why work on ROS?

In terms of hardware, open source projects like Arduino and Raspberry Pi, and vendors like Adafruit, SparkFun, and Seeed, have radically democratized access to affordable hobby robotics. The industrial segment is a growing market with powerful players and plenty of options. What unites these segments is ROS, a massive open source effort to create a standard for robotics software.

Our mission at balena is reducing friction for IoT fleet owners. With this series of robotics blog posts, I want to reduce friction for anyone (from professionals to hobbyists) wanting to create one or more robots using ROS and balena.

As mentioned, this post talks more about the tech stack and my proposed, work-in-progress robotics block. In future posts, we’ll talk about hardware: our development setup and how you can build the same robots yourself.

Background

I’ve been a maker and DIY enthusiast for as long as I can remember. When I first got into robotics, I, like many others, spent tons of time on a forum called letsmakerobots.com. I absolutely loved that site. It had everything a young, inexperienced robotics enthusiast could have dreamed of. People would post about robots they had built and keep posting updates about them for years. Here’s an example of one such post that’s still getting updated. This planted the idea of building an extendable robot base that could be customized and upgraded as much as possible.

During those years I had some opportunities to teach robotics. In 2013, together with a couple of friends I had met at robot competitions, I organized what may have been the first robotics camp in Romania. Later, in 2015, a physics teacher from my high school and I started a weekend extracurricular robotics class. I went off to study at university, but the project took off, and over the years it has grown into an amazing crew of talented teenagers who build great competition robots.

In both cases, a lot of energy went into researching kits that students could assemble and use across different projects. I’m happy to keep working on ways to make robotics more accessible through my work on ROS at balena.

ROS 101

Before we get into that, we need to understand our tools and frameworks, so let me give you a brief overview of ROS, the Robot Operating System.

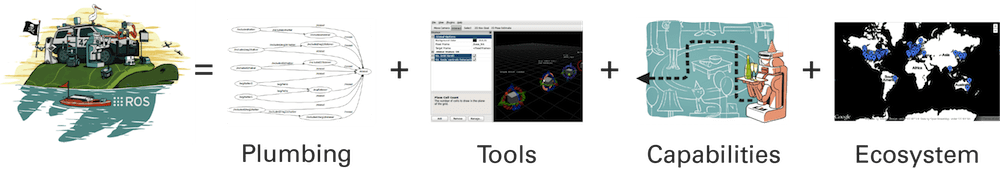

ROS is best described as a collection of software for robotics and the ecosystem around it:

* Plumbing describes the ROS computation graph: the way the different parts of ROS talk to each other.

* Tools include things like the catkin build system, Rviz for 3D visualisation, and Gazebo for simulation.

* Capabilities are complex tasks that combine software from multiple packages and sources, such as SLAM or inverse kinematics. These are crucial building blocks for more complex robots.
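To make the plumbing idea a bit more concrete, here is a toy, in-process sketch of topic-based publish/subscribe, the main pattern ROS nodes use to exchange data. The `Graph` class and the topic names are hypothetical; real ROS adds node discovery, network transport between processes, and typed message definitions on top of this idea.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class Graph:
    """Toy, in-process stand-in for the ROS computation graph (hypothetical)."""

    def __init__(self) -> None:
        # Topic name -> callbacks registered by subscribing nodes.
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> int:
        """Deliver a message to every subscriber of a topic; return how many got it."""
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)


graph = Graph()
readings = []
# A "planner" node subscribes to distances that a "sensor" node publishes.
graph.subscribe("/front_range", readings.append)
delivered = graph.publish("/front_range", 0.42)
print(readings, delivered)  # [0.42] 1
```

Because a publisher and a subscriber only share a topic name, either side can be replaced (say, a simulated sensor swapped for a real one) without touching the other.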

Ecosystem

The ROS ecosystem is a large and diverse group of organizations and individuals, including academia, enterprises such as ABB and Boston Dynamics, government agencies and institutions like NASA and ESA, and thousands of robotics enthusiasts and makers all around the world.

Of course, there are also the very active ROS Forums, where individuals and organizations share knowledge and their work and show off the robots they have built. These folks are passionate about building things and sharing their projects with the world.

We share that ethos at balena, and we see a lot of overlap between the ROS community and the balena community and ecosystem. That overlap is one of our primary motivations for developing a robotics platform in the first place.

One thing I absolutely love about ROS is its scalability: it simply grows with your solution. Take SLAM as an example. You can use the same mapping software inside a simulation on your workstation, with a $115 USD sensor such as the RPLidar A1, or with an $8,000+ USD professional unit like this one. Apart from some minor differences in configuration, everything stays the same.
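Here is a small illustration of why mostly the configuration changes. The sketch converts a 2D scan into Cartesian points; the `Scan` dataclass mirrors a few fields of ROS’s `sensor_msgs/LaserScan` message, and the sensor parameters in the example are made up rather than taken from any datasheet.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Scan:
    """Subset of the fields in ROS's sensor_msgs/LaserScan (illustrative only)."""
    angle_min: float        # angle of the first reading, radians
    angle_increment: float  # angular step between readings, radians
    range_min: float        # readings below this are invalid, metres
    range_max: float        # readings above this are invalid, metres
    ranges: List[float]     # measured distances, metres


def scan_to_points(scan: Scan) -> List[Tuple[float, float]]:
    """Convert valid range readings to (x, y) points in the sensor frame."""
    points = []
    for i, r in enumerate(scan.ranges):
        if not (scan.range_min <= r <= scan.range_max):
            continue  # skip out-of-range readings, including inf and NaN
        angle = scan.angle_min + i * scan.angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


# Hypothetical hobby-grade and professional sensors: only the parameters differ.
hobby = Scan(angle_min=0.0, angle_increment=math.pi / 2, range_min=0.15,
             range_max=12.0, ranges=[1.0, 2.0, 20.0, float("inf")])
pro = Scan(angle_min=-math.pi / 4, angle_increment=math.pi / 4, range_min=0.05,
           range_max=100.0, ranges=[30.0, 30.0, 30.0])
print(len(scan_to_points(hobby)), len(scan_to_points(pro)))  # 2 3
```

The conversion code is identical for both scans; only the field values, which a real sensor driver would fill in, differ.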

Why is this important?

I want to prove that you can prototype your idea on a hobby robot platform and move to an industrial robot as you grow. At balena, we want to help you at every step of that journey.

Here’s how we plan to accomplish this at balena.

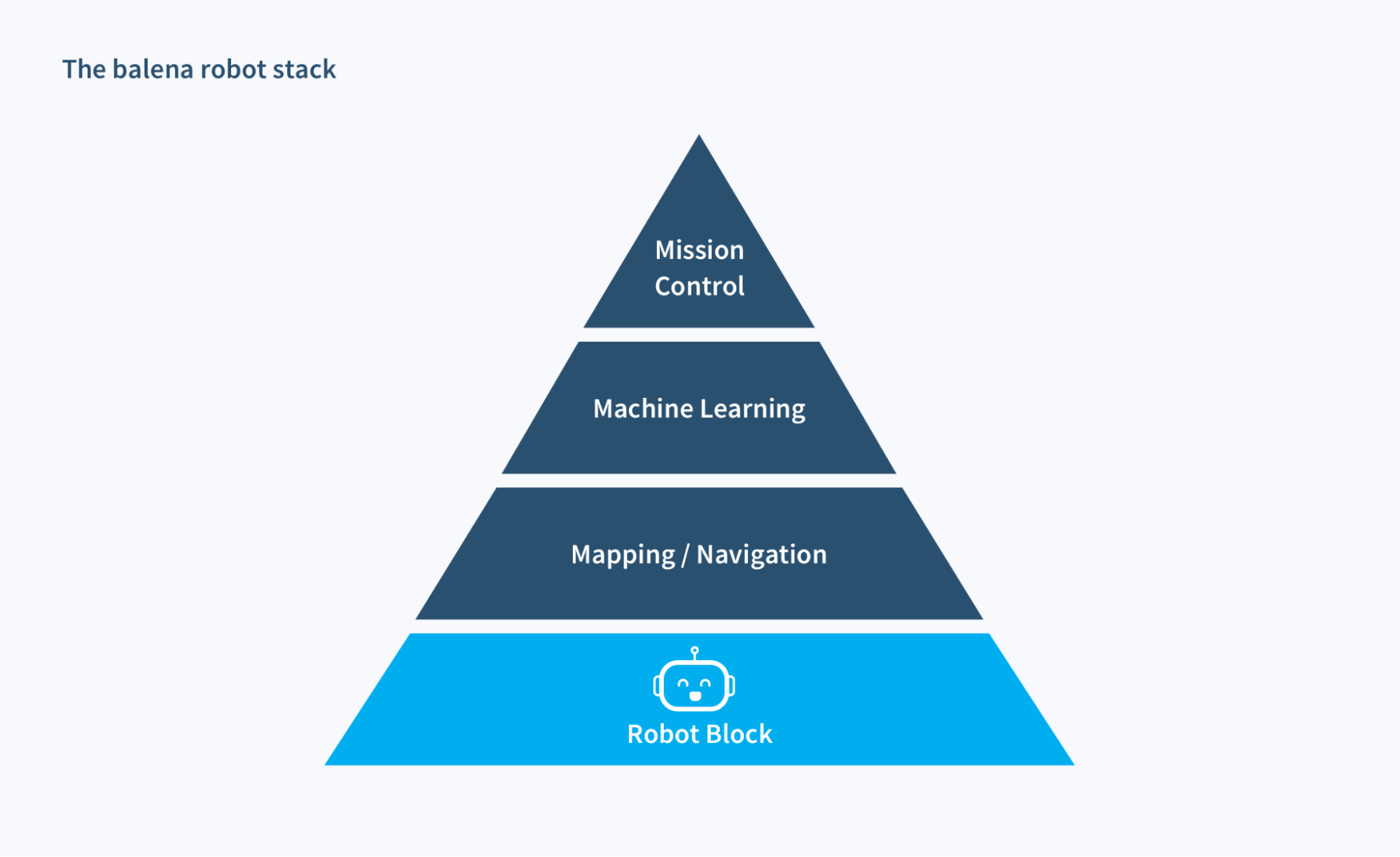

The balena robot stack

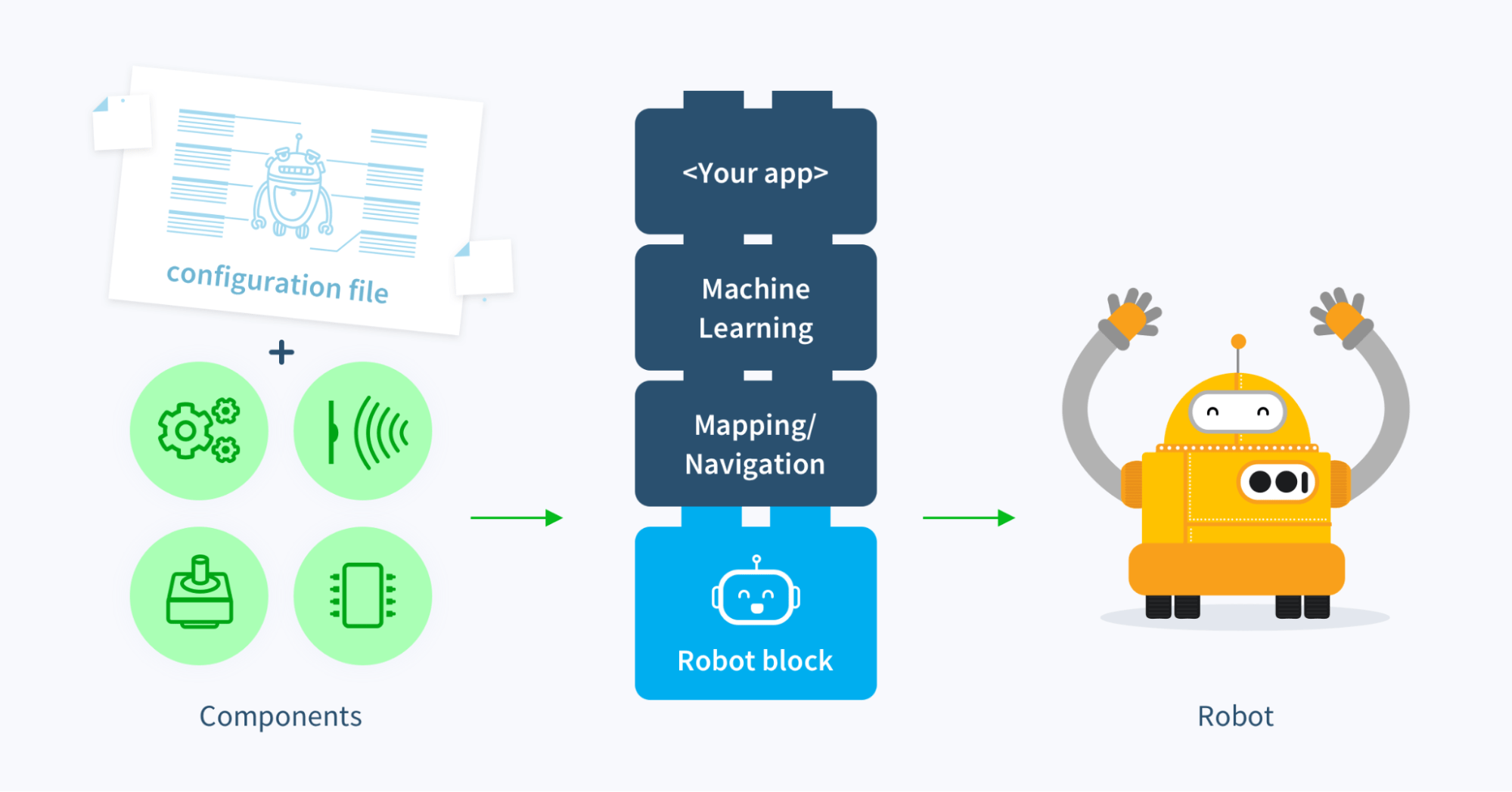

Now that we understand the basics of ROS, let’s talk about how we plan to reduce friction for robot developers by integrating it with balena. The following is a work-in-progress plan for a balenaBlock that abstracts away some of the complexity of setting up a ROS project.

The easiest way to visualize the software packages and how they communicate is as a stack. At the bottom is the Robot Block, which abstracts away the hardware components; on top is Mission Control, which communicates with all the other layers so developers can create complex robotics solutions without any explicit ROS knowledge. In between, there are two types of containers:

* ROS Modules encapsulate ROS functionality and are packaged together for simplicity and ease of maintenance. These modules can enable functionality like mapping, navigation, kinematics, and more.

* ML Modules contain the runtime libraries needed to perform neural network inference. If a robot configuration runs inference with different models at the same time (say, speech recognition and object detection), each model gets its own container.
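As a rough sketch of how these layers could map to containers, the function below lists the services for a given robot, bottom to top. The container names are hypothetical and the sketch assumes one container per ROS Module; the one-container-per-model rule for ML Modules follows the description above.

```python
from typing import List


def compose_stack(ros_modules: List[str], ml_models: List[str]) -> List[str]:
    """Return container names for a hypothetical robot stack, listed bottom to top."""
    if len(set(ml_models)) != len(ml_models):
        raise ValueError("each ML model gets its own container, so names must be unique")
    services = ["robot-block"]                     # hardware abstraction at the bottom
    services += [f"ros-{m}" for m in ros_modules]  # ROS Modules, e.g. mapping, navigation
    services += [f"ml-{m}" for m in ml_models]     # ML Modules: one container per model
    services.append("mission-control")             # top layer, talks to all the others
    return services


print(compose_stack(["mapping", "navigation"], ["object-detection", "speech-recognition"]))
```

Keeping each model in its own container means a model can be swapped or updated without rebuilding the rest of the stack.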

Let’s take a look at each one of these layers, and what their role is.

Introducing the Robot Block

To make things even simpler, we are prototyping an upcoming balenaBlock that abstracts away the hardware configuration and provides all the necessary plumbing, so you can talk to the hardware through ROS communication channels as soon as your single-board computer (SBC) finishes booting. For now, the block is meant for mobile robots only, but we plan to provide the same functionality for drones and manipulators in the future.

We will start by supporting a couple of common hobby modules by default, but we plan to introduce a standard that would allow the community to add third-party support for any type of module, sensor, or actuator, whether industrial or hobby grade.
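One common way to let third parties add hardware support is a driver registry keyed by component type. The sketch below is purely hypothetical: `Driver`, `register_driver`, `make_driver`, and the `"ultrasound"` type name are invented for illustration and are not the robot block’s actual interface.

```python
from typing import Callable, Dict, Type


class Driver:
    """Hypothetical base class that every hardware driver would implement."""

    def __init__(self, name: str, params: dict) -> None:
        self.name = name
        self.params = params

    def read(self) -> float:
        raise NotImplementedError


# Hypothetical registry: component type string -> driver class.
DRIVERS: Dict[str, Type[Driver]] = {}


def register_driver(component_type: str) -> Callable[[Type[Driver]], Type[Driver]]:
    """Class decorator that registers a driver class for a component type."""

    def decorator(cls: Type[Driver]) -> Type[Driver]:
        if component_type in DRIVERS:
            raise ValueError(f"a driver for {component_type!r} is already registered")
        DRIVERS[component_type] = cls
        return cls

    return decorator


def make_driver(component_type: str, name: str, params: dict) -> Driver:
    """Instantiate the registered driver for a component type."""
    cls = DRIVERS.get(component_type)
    if cls is None:
        raise ValueError(f"no driver registered for {component_type!r}")
    return cls(name, params)


# A third-party package could ship a driver like this one.
@register_driver("ultrasound")
class FakeUltrasound(Driver):
    """Stand-in that returns a fixed distance instead of reading real hardware."""

    def read(self) -> float:
        return float(self.params.get("fixed_distance_m", 1.0))


front = make_driver("ultrasound", "front_range", {"fixed_distance_m": 0.8})
print(type(front).__name__, front.read())  # FakeUltrasound 0.8
```

With this pattern the core block never needs to know about a specific driver class; it only looks up the type name it finds in the robot’s configuration.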

We want this block to be as universal as possible, enabling robots to be part of the same fleet even if their hardware configurations are radically different. We’d like developers to be able to change and evolve the hardware while the upper layers of the stack (computer vision, SLAM, etc.) stay the same.

What the robot block does is driven by a configuration file that defines both the components and the geometry of the platform. At runtime, the block reads this robot definition and creates all the publishers and subscribers needed to access the hardware.
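A robot definition of this kind might look like the JSON below. The schema, component type names, geometry values, and topic naming are assumptions for illustration, not the block’s actual format. The sketch only decides which publishers (sensor data out) and subscribers (commands in) to create; a real ROS node would then create them with a client library such as `rospy` or `rclpy`.

```python
import json
from typing import Dict, List, Tuple

# Hypothetical robot definition; the real block's file format may differ.
ROBOT_DEFINITION = """
{
  "name": "rover",
  "geometry": {"wheel_radius_m": 0.033, "wheel_separation_m": 0.16},
  "components": [
    {"name": "left_motor", "type": "motor_driver"},
    {"name": "right_motor", "type": "motor_driver"},
    {"name": "left_encoder", "type": "quadrature_encoder"},
    {"name": "front_range", "type": "ultrasound"},
    {"name": "camera", "type": "camera"}
  ]
}
"""

# Hypothetical mapping from component type to the ROS endpoint it needs:
# actuators subscribe to commands, sensors publish readings.
ROLE_BY_TYPE = {
    "motor_driver": "subscribers",
    "hobby_servo": "subscribers",
    "quadrature_encoder": "publishers",
    "ultrasound": "publishers",
    "camera": "publishers",
}


def plan_endpoints(definition: str) -> Dict[str, List[Tuple[str, str]]]:
    """List the (topic, component type) pairs to publish on or subscribe to."""
    robot = json.loads(definition)
    # The geometry section would feed things like odometry; this sketch ignores it.
    plan: Dict[str, List[Tuple[str, str]]] = {"publishers": [], "subscribers": []}
    for component in robot["components"]:
        role = ROLE_BY_TYPE.get(component["type"])
        if role is None:
            raise ValueError(f"unsupported component type: {component['type']!r}")
        topic = f"/{robot['name']}/{component['name']}"
        plan[role].append((topic, component["type"]))
    return plan


plan = plan_endpoints(ROBOT_DEFINITION)
print(plan["subscribers"])
# [('/rover/left_motor', 'motor_driver'), ('/rover/right_motor', 'motor_driver')]
```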

Some of the hardware that’s currently supported includes:

* motor drivers
* stepper motors
* hobby servos
* sensors:
    * quadrature encoders
    * cameras (CSI or USB)
    * ranging sensors (ultrasound, infrared, time-of-flight)
    * motion sensors
    * power sensors

The implementation of the robotics block is available here; however, it’s at a very early stage of development. Community feedback and observations are welcome. We’ll also keep iterating on and improving this model internally.

Mapping / Navigation

SLAM (Simultaneous Localization and Mapping) is a general term for the computational task of constructing a map of an environment while also keeping track of the agent’s position within that map. There are a few ways to accomplish this, depending on the sensors used. When people refer to SLAM, they often mean Visual SLAM, which uses cameras and localizes the agent by recognizing landmarks in areas it has already explored.

Here are a few implementations widely used in the ROS community. For robots with a 2D LIDAR, there are hector_slam and gmapping. Google’s Cartographer and rtabmap can be configured to use depth information from 3D LIDAR sensors, stereo cameras, or depth cameras such as Intel RealSense and Stereolabs ZED.
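hector_slam and gmapping both publish their map as an occupancy grid. To show what the mapping half of SLAM involves, here is a simplified sketch that assumes the robot’s pose is already known (the localization half is skipped): each beam lowers the log-odds of the cells it passes through and raises it for the cell where it ends. The increments, grid size, and Bresenham ray tracing are illustrative choices, not what either package does internally.

```python
import math
from typing import List, Tuple

L_FREE = -0.4  # illustrative log-odds change for a cell a beam passes through
L_OCC = 0.85   # illustrative log-odds change for the cell where a beam ends


def bresenham(x0: int, y0: int, x1: int, y1: int) -> List[Tuple[int, int]]:
    """Grid cells on the line from (x0, y0) to (x1, y1), both ends included."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def integrate_beam(grid: List[List[float]], res: float,
                   pose: Tuple[float, float, float], angle: float, rng: float) -> None:
    """Update grid[row][col] log-odds for one beam, given a known pose (x, y, theta)."""
    x, y, theta = pose
    end_x = x + rng * math.cos(theta + angle)
    end_y = y + rng * math.sin(theta + angle)
    cells = bresenham(math.floor(x / res), math.floor(y / res),
                      math.floor(end_x / res), math.floor(end_y / res))
    for i, (cx, cy) in enumerate(cells):
        if 0 <= cy < len(grid) and 0 <= cx < len(grid[0]):
            # Cells before the endpoint were seen through (free); the endpoint was hit.
            grid[cy][cx] += L_OCC if i == len(cells) - 1 else L_FREE


def probability(log_odds: float) -> float:
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))


grid = [[0.0] * 5 for _ in range(5)]
# Robot at the centre of cell (0, 0) facing +x; one beam straight ahead hits at 3 m.
integrate_beam(grid, res=1.0, pose=(0.5, 0.5, 0.0), angle=0.0, rng=3.0)
print(grid[0])  # [-0.4, -0.4, -0.4, 0.85, 0.0]
```

A real mapper would also skip the occupied update for beams that hit nothing within range, and would have to estimate the pose that this sketch simply receives.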

Another option for SLAM is a tracking camera such as Intel’s RealSense T265. All the computation needed to perform Visual SLAM runs on the camera’s integrated ASIC, freeing up your SBC for other tasks. This is especially useful for drones, where a LIDAR sensor would be highly impractical.

Keeping Machine Learning in mind

Although neural networks are not part of the ROS ecosystem itself, it is very common for robots today to include some kind of neural-network-based computer vision. To provide a full package for modern robot development, we support a couple of different approaches for deploying machine vision models on the balena robot stack:

- Edge Impulse is one of our favorite ways of deploying machine learning on edge devices. Check out our guide on using machine learning to detect matching socks, powered by a Raspberry Pi.

- Jetson Nano supports running the most popular deep learning networks with native GPU acceleration. There are already ROS wrappers for some of these networks, such as Darknet YOLOv3 for object detection tasks and DeepLab for segmentation.

- OpenVINO is another great option if you are using an x86-64-based SBC on your robot. Alternatively, Intel sells a USB-based AI accelerator, the Neural Compute Stick 2 (NCS2), that enables OpenVINO on ARM-based devices. Check out our OpenVINO starter project for more information.

Using balena to make ROS more accessible

We’d like to provide a high-level API that completely abstracts away all the complexity of ROS for robotics developers.

The first step is to get to an official “robot block” that provides a hardware abstraction layer and, eventually, to build a solution that abstracts away the complexities of ROS. That way you can focus on your robotics solution instead of writing wrappers, adapters, and other “plumbing” code.

Orchestrating robotic fleet intelligence

The most exciting part is orchestrating fleets of robots that pool their resources to perform a mission: a kind of IoT fleet intelligence.

Let’s say one robot in the fleet has a LIDAR sensor, another has an AI accelerator, and a third has a robot arm. You can send the first robot to build a map of the surrounding area, then send the second one to detect objects and add them to the map as points. The third robot, the one equipped with a manipulator, can pick up the objects later.
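One way to frame this mission is to match each step to a robot that has the required capability. The sketch below is a naive, hypothetical planner; the robot names, capability labels, and first-capable-robot rule are all assumptions, and a real orchestrator would likely also weigh location, battery level, and availability.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple


@dataclass
class Robot:
    name: str
    capabilities: Set[str] = field(default_factory=set)


def assign_mission(steps: List[Tuple[str, str]], fleet: List[Robot]) -> List[Tuple[str, str]]:
    """Assign each (task, required capability) step, in order, to the first capable robot."""
    plan = []
    for task, capability in steps:
        robot = next((r for r in fleet if capability in r.capabilities), None)
        if robot is None:
            raise ValueError(f"no robot can {task!r}: missing capability {capability!r}")
        plan.append((task, robot.name))
    return plan


fleet = [
    Robot("scout", {"lidar"}),
    Robot("spotter", {"ai_accelerator", "camera"}),
    Robot("picker", {"manipulator"}),
]
# Steps stay in order: objects can only be added once the map exists.
mission = [
    ("build map", "lidar"),
    ("detect objects and add them to the map", "ai_accelerator"),
    ("pick up objects", "manipulator"),
]
for task, robot_name in assign_mission(mission, fleet):
    print(f"{robot_name}: {task}")
```

This prints `scout: build map`, then `spotter: detect objects and add them to the map`, then `picker: pick up objects`.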

We are also planning to support robots doing tasks in parallel in the future.

What’s next?

We see our community building amazing IoT, AI, and physical computing projects, so it’s not much of a stretch for us to provide some building blocks that enable robotics projects as well.

I’d like to note again that the last section is more a vision for the future than a recipe for how the finished platform will look. Specifics of the technical implementation might change; however, the overall stack structure should stay largely the same.

Development and testing will take some time, so this will be an ongoing project for a while. Still, I hope to provide a solid foundation that the community can use, modify, and contribute to.

The first priority is to finish core development of the robot block. Later, we’ll develop the upper layers, and finally Mission Control. Each of these is an important milestone and will be documented either in another blog post or on the forums.

Stay updated on balena and ROS

If you want to take a deeper dive into how this platform was designed and built, check out this post in the Show and Tell category of the forums. We’ve also integrated it into the comments below.

As we develop and iterate on this concept and create the building blocks for amazing robotics projects, we want to hear from you and want you to show off anything you build using this platform!

Be sure to post on our Forums (the Show and Tell category warmly welcomes anyone who’s building something neat), so that we can see what you are creating. You can always get in touch with us on social media or other parts of the Forums as well.