The global chip shortage that started in 2020 is an ongoing crisis. Demand for the semiconductor chips and parts used to build single-board computers (SBCs) far exceeded the available supply. It was a perfect storm that forced industries to either adapt or break down. The crisis did break a few things: prices surged, out-of-stock notices multiplied, and delivery timelines for components stretched years ahead. So it comes as no surprise that an IoT fleet management platform like balena would be expected to take the hit as well.

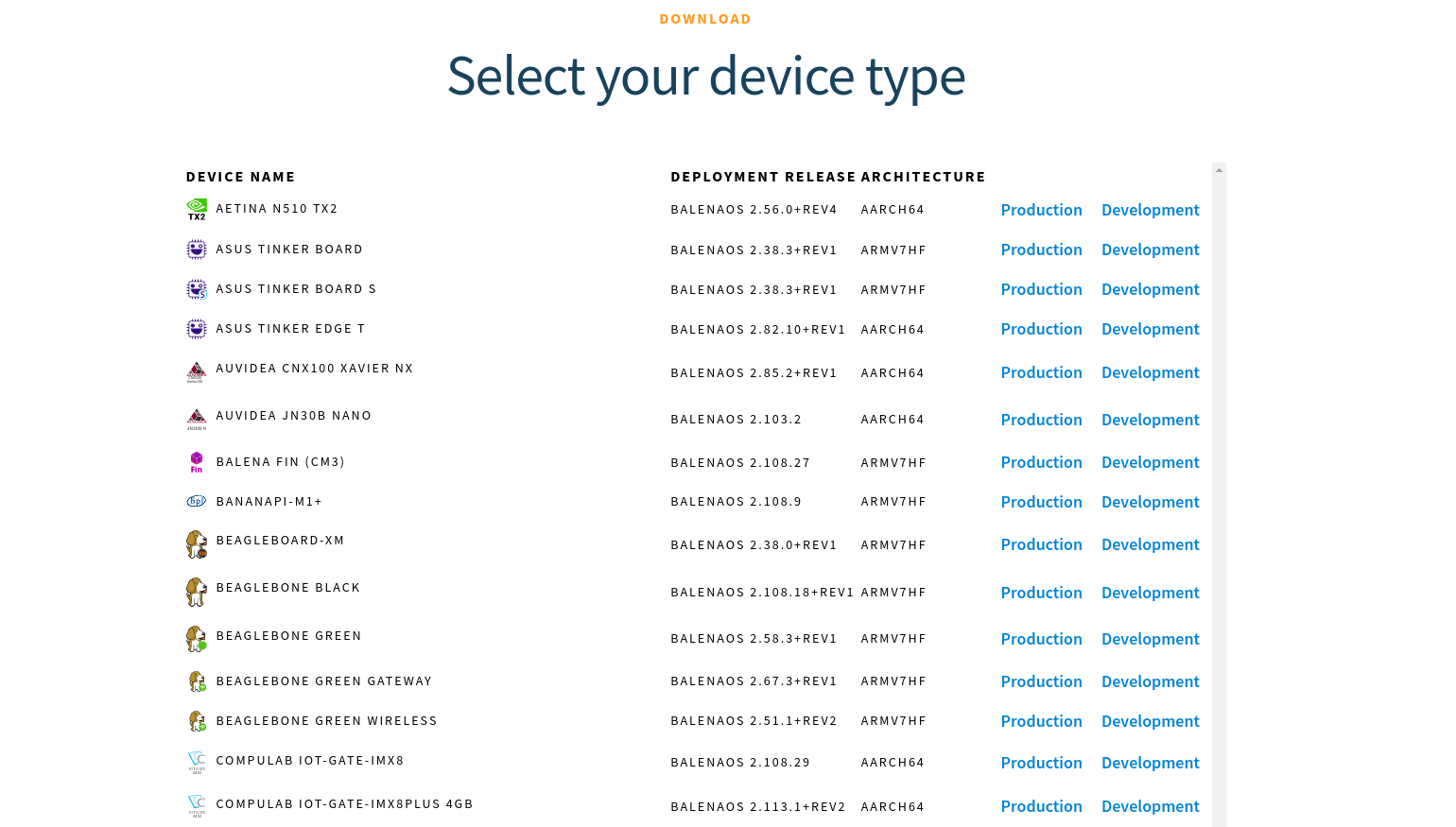

Contrary to market trends and expectations, we scaled balenaOS support (80+ devices and counting) and added automated test capacity for new device types during this time. The shortage of certain ICs, as well as of the balenaFin boards critically needed to build testbots, would have hampered this progress. But our work on generalizing the testing hardware, together with automated testing using QEMU emulation, helped us deflect the lethal blow of supply chain disruptions.

Growing Pains

Previously, we went over the automated testing setup for balenaOS where we briefly touched upon our overall testing setup, build pipelines, and components being used. With supply chain disruptions, our team started having issues sourcing the aforementioned devices to test against. It became harder to build testbots, scale our rig, build features, or even write new tests for balenaOS since every step in the process required hardware.

Additionally, testbots can be slow to test against. With the number of boards we support, each run of builds and tests can take up to 4-5 hours. Depending on the setup, worker availability in the testbot rig, and the device under test (DUT), the times can vary quite a lot. Errors and false negatives lead to retries, which further exacerbate the problem. Up until early 2021, this led to large queues of waiting test jobs and to slow, error-prone development of Leviathan.

The Hardware Uncertainty

Hardware in the rig, despite best efforts, also needs repairs and upgrades. Even different revisions of the same board model can lead to conflicting results, which makes automated testing hard and reproducing test failures on testbots even harder. For example, the Raspberry Pi 4’s board revision 1.5 failed to boot balenaOS, so we needed to source that exact board revision in order to develop, test and release a new version of balenaOS.

With our hardware testing rig continuously playing catch-up, it was becoming increasingly tough to grow the rig to cover all the device types we want to support in balenaOS testing.

Problems we faced

All of these problems, aggravated further by supply chain disruptions and unpredictable logistics, led to a perfect storm that made it clear which issues had to be solved.

- We need a way to scale our DUT capacity for testing beyond what physical hardware allows.

- We need a fast, reproducible, easy-to-access environment to write tests & develop Leviathan features.

- We need to test and retest 70-100 build runs per day arising from balenaOS pull requests.

So how did we go about solving all this? The answer was a new Leviathan worker.

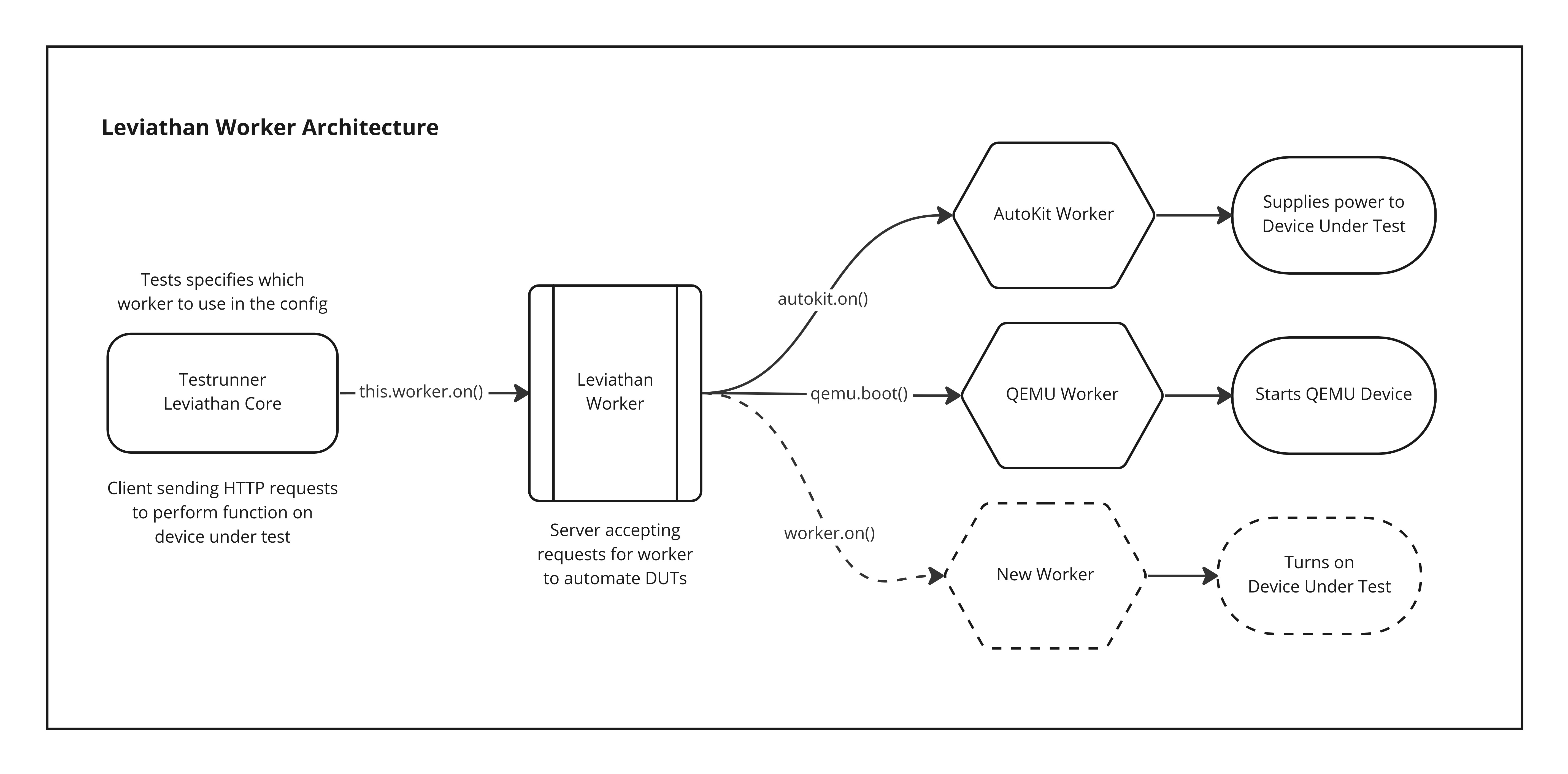

Understanding Leviathan Workers

Leviathan is an open-source hardware testing framework. It provides an abstraction layer that lets you run the same test code against multiple devices under test (DUTs) in different environments without having to deal with the complexity yourself. Leviathan integrates with our Yocto build workflow for balenaOS on Jenkins CI to run the tests on draft builds of balenaOS.

The features that automate device functions such as power on/off, flashing, and capturing HDMI output are provided by Leviathan workers. A worker handles the complexity of powering on a physical device such as a Jetson TX2, a Raspberry Pi 4 or an Intel NUC so you don’t have to. Each device has a different flashing process, a different power on/off process, and so on. Hence, the Leviathan worker provides a unified API that tests use to interact with the device under test. That’s how we keep our tests uniform no matter which DUT we have to test against. This unified API supports the following workers:

- Autokit worker

- QEMU worker

- Testbot worker (In maintenance mode, superseded by autokit)

For example, to flash our device under test (DUT), the tests use the flash endpoint on the Worker API. On receiving this request, the worker calls the specific worker’s function to start the flashing process. For a Raspberry Pi 3 DUT, the AutokitSDK already knows how to flash an SD card without any manual intervention using an SD multiplexer. After the flashing is complete, the power-on endpoint can be used to supply the exact voltage needed by the device, and that’s how tests run using Leviathan workers. All this complexity of working with devices is handled solely by Leviathan. Now, let’s take a look at the QEMU worker.
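To make the idea of a unified worker API concrete, here is a minimal Python sketch of the flash-then-power-on flow described above. It is an illustration only and not Leviathan's actual code: the class names, method names and returned strings are all hypothetical, and the real workers expose these operations as API endpoints rather than as in-process classes.

```python
from abc import ABC, abstractmethod
from typing import List


class Worker(ABC):
    """Hypothetical unified interface that tests talk to, whatever the DUT is."""

    @abstractmethod
    def flash(self, image_path: str) -> str:
        """Put the OS image onto the DUT's storage."""

    @abstractmethod
    def power_on(self) -> str:
        """Start the DUT."""


class AutokitWorker(Worker):
    """Stand-in for a worker that drives physical hardware."""

    def flash(self, image_path: str) -> str:
        return f"autokit: write {image_path} to SD card via multiplexer"

    def power_on(self) -> str:
        return "autokit: switch on power supply to the DUT"


class QemuWorker(Worker):
    """Stand-in for a worker that drives a virtual DUT."""

    def flash(self, image_path: str) -> str:
        return f"qemu: attach {image_path} as virtual disk"

    def power_on(self) -> str:
        return "qemu: start virtual machine"


def provision(worker: Worker, image_path: str) -> List[str]:
    """Test-side code: identical for every worker type."""
    return [worker.flash(image_path), worker.power_on()]
```

The point of the sketch is that `provision` never checks which worker it was given, so the same test code can run against a physical board or a virtual one.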

Presenting Virtual DUTs using QEMU Worker

To solve the issues we discussed above, we turned to virtualization, and emulation in particular. Being able to run OSes and programs made for one machine (e.g. an ARM board) on a different machine (e.g. your own PC) fits right in with our requirements. We chose QEMU, a generic and open-source machine emulator, to boot and provision draft balenaOS images containing new changes as virtual devices. These virtual devices act as our device under test (DUT) and are controlled and managed by the interface we call the QEMU worker in Leviathan.

The improvements from this approach have been incredibly helpful for our team during this time of crunch. Benefits, when compared to physical workers, include:

- Virtual DUTs often execute operations faster & more reliably with fewer external factors.

- Tests take almost 75% less time leading to quicker feedback.

- QEMU provides a reproducible, consistent environment to debug changes – this allows us to isolate the functionality of the OS from the hardware it’s running on. It means that if something fails, we can be certain it was a bug in the OS, rather than an issue with the hardware.

- Developers no longer require access to physical hardware to develop and run tests, which reduces both cost and friction to onboard new users to our testing workflow.

- Provisioning was observed to be 2x faster in x86 emulation. Provisioning is a bottleneck when testing on physical devices, as it involves configuring the image, flashing the storage medium, and a slightly slower first boot.

Additionally, enabling KVM hardware acceleration along with other optimizations has led to wider use cases being supported by the QEMU worker in balenaOS testing. Emulating balenaOS genericx86-64-ext images has also led to us adding support for booting balenaOS flasher-type images in QEMU.
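As a rough illustration of what booting an image as a virtual DUT with optional KVM acceleration can look like, the Python sketch below assembles a `qemu-system-x86_64` command line. It is not the QEMU worker's actual invocation: the function names, memory and CPU defaults, and the choice of virtio disk and user-mode networking are assumptions made for this example, and a real balenaOS image may need further options (for instance UEFI firmware) that are not shown here.

```python
import os
from typing import List


def kvm_available() -> bool:
    """KVM is usable when /dev/kvm exists and is readable and writable."""
    return os.access("/dev/kvm", os.R_OK | os.W_OK)


def build_qemu_command(image_path: str, memory_mb: int = 2048,
                       cpus: int = 2, use_kvm: bool = False) -> List[str]:
    """Assemble an argument list for booting a raw disk image (hypothetical helper)."""
    cmd = [
        "qemu-system-x86_64",
        "-m", str(memory_mb),           # guest RAM in MiB
        "-smp", str(cpus),              # number of virtual CPUs
        "-drive", f"file={image_path},format=raw,if=virtio",
        "-netdev", "user,id=net0",      # user-mode networking through the host
        "-device", "virtio-net-pci,netdev=net0",
        "-nographic",                   # serial console instead of a window
    ]
    if use_kvm:
        # Hardware acceleration: run guest code on the host CPU directly.
        cmd += ["-enable-kvm", "-cpu", "host"]
    return cmd
```

A caller could pass the result to `subprocess.run(build_qemu_command("balena.img", use_kvm=kvm_available()))`, falling back to plain emulation on hosts without KVM.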

Supercharging Leviathan Development

BalenaOS tests are written and executed on Leviathan. Along with features like finding available workers in a fleet, creating test environments, and acting as a test runner, Leviathan also hosts a number of helpers to aid test execution and provide a better developer experience.

Naturally, the development of a hardware testing framework is seriously affected when there is no hardware around to test it against. Ideally, any Leviathan change is tested end to end on the same setup as the balenaOS tests, to make sure that changes and updates to the framework do not break existing tests and can support the features needed for new tests. When the crunch came, the team was able to keep working on Leviathan using the QEMU worker with no additional hardware or setup required.

Having QEMU emulate a balenaOS DUT right on the developer’s system meant faster setups: no power needed to be supplied, the host network could be used directly by the DUT, and flashing was much faster as well. This resulted in quicker iterations and new features being shipped, which in turn helped the team write new tests for new devices needing support on the rig.

Scaling to hundreds of balenaOS builds tested every day

Each change in balenaOS, from planning to development to pull request to release, goes through several rounds of testing. Running those tests, whether locally or on Jenkins, was a bottleneck in the balenaOS release cycle. Testing the OS builds for every pull request across the 80+ device types we support, with many more in the queue, left us always chasing increased test capacity, automating what we could, and optimizing the test suites themselves.

During the chip shortage, the team was blocked by hardware unavailability and uncertainty. BalenaOS’s meta-balena tests were the first to use the new QEMU worker, initially for pull requests on the meta-balena repository. By scaling our Jenkins compute capacity, we were able to horizontally scale the system to run multiple Leviathan QEMU tests concurrently, leading to even smoother workflows. No more queues of PRs waiting to be tested, more PRs getting merged, and more frequent OS releases for our customers.
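The horizontal scaling described above boils down to running many independent test suites side by side, since virtual DUTs do not compete for a fixed pool of boards. The Python sketch below shows that pattern with a thread pool. It is a simplified illustration, not our Jenkins setup: `run_suites` and `fake_suite` are hypothetical names, and `fake_suite` merely stands in for "boot a virtual DUT and run the tests against it".

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def run_suites(jobs: List[str], run_one: Callable[[str], bool],
               max_workers: int = 4) -> Dict[str, bool]:
    """Run one test suite per job concurrently and map each job to pass/fail."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with jobs.
        results = list(pool.map(run_one, jobs))
    return dict(zip(jobs, results))


def fake_suite(job: str) -> bool:
    """Placeholder for a real suite run; jobs ending in '-broken' fail."""
    return not job.endswith("-broken")
```

With physical DUTs, `max_workers` is capped by the number of boards in the rig; with virtual DUTs it is limited only by the available compute.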

Using the QEMU worker also relieved the stress on our hardware rigs, which have only 2 or at most 3 DUTs of each type connected to autokits. With 20-25 device types supported for automated testing, this can easily result in the need for 50-100 devices in the rig, hardware which simply was not available during the peak of the supply chain disruptions.

All the advantages of QEMU testing lead to a richer developer experience and, ultimately, to engineers’ confidence in the tests. Testing operating systems isn’t easy, but we are doing what we can to release more OS versions confidently. Over time, the system has made all our efforts worth it by finding critical bugs in balenaOS, and developers are writing new tests for features every day.

They see us testing, we keep on building

Virtualization has truly been the ultimate tool in our workflow for development, testing, automation, and even deployment, helping us not just survive but thrive during the hardware shortage. The work done by the team truly makes this a chip shortage success story. With this post, we hope to share our knowledge, ideas, and even mistakes to help folks of any skill level build scalable solutions and unlock even higher potential when using virtualized workloads in their projects.

Looking forward to seeing more thoughts from people and how we can improve on our setup! Feel free to ask any questions!